Half of LLM users (49%) think the models they use are smarter than they are, including 26% who think their LLMs are “a lot smarter.” Another 18% think LLMs are as smart as they are. Here are some of the other attributes they see:

- Confident: 57% say the main LLM they use seems to act in a confident way.

- Reasoning: 39% say the main LLM they use shows the capacity to think and reason at least some of the time.

- Sense of humor: 32% say their main LLM seems to have a sense of humor.

- Morals: 25% say their main model acts like it makes moral judgments about right and wrong at least sometimes.

- Sarcasm: 17% say their prime LLM seems to respond sarcastically.

- Sad: 11% say the main model they use seems to express sadness, while 24% say that model also expresses hope.

What that overwhelming, uncritical, capitalist propaganda do…

I had to tell a bunch of librarians that LLMs are literally language models made to mimic language patterns, and are not made to be factually correct. They understood it when I put it that way, but librarians are supposed to be “information professionals”. If they, as a slightly better trained subset of the general public, don’t know that, the general public has no hope of knowing that.

People need to understand it’s a really well-trained parrot that has no idea what it is saying. That’s why it can give you chicken recipes and software code; it’s seen them before. Then it uses statistics to put words together that usually appear together. It’s not thinking at all, despite LLMs using words like “reasoning” or “thinking”.
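To make the "words that usually appear together" idea concrete, here is a toy sketch. It is a plain bigram counter, not how a real LLM is built (those are large neural networks trained on tokens), and the corpus and function names are made up for illustration. It only shows the basic idea of picking the next word from observed statistics.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, length=8, seed=0):
    """Build a sentence by repeatedly sampling a likely next word."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:
            break  # the last word was never followed by anything
        candidates, counts = zip(*options.items())
        # Words seen more often after the current word are more likely.
        out.append(rng.choices(candidates, weights=counts, k=1)[0])
    return " ".join(out)

# Hypothetical toy corpus, assumed purely for the example.
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

The output is always locally plausible (every pair of neighbouring words appeared in the corpus), yet nothing in the code knows what a cat or a mat is.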

It’s so weird watching the masses ignore industry experts and jump on weird media hype trains. This must be how doctors felt in Covid.

It’s so weird watching the masses ignore industry experts and jump on weird media hype trains.

Is it though?

I’m the expert in this situation and I’m getting tired explaining to Jr Engineers and laymen that it is a media hype train.

I worked on ML projects before they got rebranded as AI. I get to sit in the room when these discussions happen with architects and actual leaders. This is hype. Anyone who tells you otherwise is lying or selling you something.

I see how that is a hype train, and I also work with machine learning (though I’m far from an expert), but I’m not convinced these things are not getting intelligent. I know what their problems are, but I’m not sure whether the human brain works the same way, just (so far) more effectively.

That is, we have visual information, and some evolutionary BIOS, while LLMs have to read the whole internet and use a power plant to function - but what if our brains are just the same bullshit generators, we are just unaware of it?

I work in an extremely related field and spend my days embedded into ML/AI projects. I’ve seen teams make some cool stuff and I’ve seen teams make crapware with “AI” slapped on top. I guarantee you that you are wrong.

What if our brains…

Here’s the thing: you can go look this information up. You don’t have to guess. This information is readily available to you.

LLMs work by agreeing with you and stringing together coherent text in patterns they recognize from huge samples. It’s not particularly impressive, and they are far, far closer to the initial chat bots from last century than to real GAI or some sort of singularity. The limits we’re at now are physical. Look up how much electricity and water it takes just to do trivial queries. Progress has plateaued, as it frequently does with tech like this. That’s okay, it’s still a neat development. The only big takeaway from LLMs is that agreeing with people makes them think you’re smart.

In fact, LLMs are a glorified Google at higher levels of engineering. When most of the stuff you need to do doesn’t have a million Stack Overflow articles to train on, it’s going to be difficult to get an LLM to contribute in any significant way. I’d go so far as to say it hasn’t introduced any tool I didn’t already have. It’s just mildly more convenient than some of them while the costs are low.

Librarians went to school to learn how to keep order in a library. That does not inherently make them have more information in their heads than the average person, especially regarding things that aren’t books and book organization.

Librarians go to school to learn how to manage information, whether it is in book format or otherwise. (We tend to think of libraries as places with books because, for so much of human history, that’s how information was stored.)

They are not supposed to have more information in their heads, they are supposed to know how to find (source) information, catalogue and categorize it, identify good information from bad information, good information sources from bad ones, and teach others how to do so as well.

moron opens encyclopedia “Wow, this book is smart.”

If it’s so smart, why is it just lying around on a bookshelf and not working a job to pay rent?

“Half of LLM users” believe this. Which is not to say that people who understand how flawed LLMs are, or what their actual function is, do not use LLMs and therefore aren’t included in this statistic?

This is kinda like saying ‘60% of people who pay for their daily horoscope believe it is an accurate prediction’.

LLMs are made to mimic how we speak, and some can even pass the Turing test, so I’m not surprised that people who don’t know better think of these LLMs as conscious in some way or another.

It’s not necessarily the fault of those people; it’s the fault of how LLMs are purposefully misadvertised to the masses.

The funny thing about this scenario is by simply thinking that’s true, it actually becomes true.

Large language model. It’s what all these AI really are.

I’m 100% certain that LLMs are smarter than half of Americans. What I’m not so sure about is that the people with the insight to admit being dumber than an LLM are the ones who really are.

A daily bite of horror.

I wouldn’t be surprised if that is true outside the US as well. People who actually (have to) work with the stuff usually learn quickly that it’s only good at a few things, but if you just hear about it in the (pop-, non-techie-) media (including YT and such), you might be deceived into thinking Skynet is just a few years away.

It’s a one trick pony.

That trick also happens to be a really neat trick that can make people think it’s a Swiss Army knife instead of a shovel.

Two things can be true at once! Though I suppose it depends on what you define as “a few.”

Am American.

…this is not the flex that the article writer seems to think it is.

You say this like this is wrong.

Think of a question that you would ask an average person and then think of what the LLM would respond with. The vast majority of the time the LLM would be more correct than most people.

A good example is the post on here about tax brackets. Far more Republicans didn’t know how tax brackets worked than Democrats. But every mainstream language model would have gotten the answer right.

I bet the LLMs also know who pays tariffs.

Memory isn’t intelligence.

Then ask it a logic question. What question are you asking that the LLMs are getting wrong and your average person is getting right? How are you proving intelligence here?

LLMs are an autocorrect.

Let’s use a standard definition like “intelligence is the ability to acquire, understand, and use knowledge.”

It can acquire (learn) and use (access, output) data but it lacks the ability to understand it.

This is why we have AI telling people to use glue on pizza or drink bleach.

I suggest you sit down with an AI some time and put a few versions of the Trolley Problem to it. You will likely see what is missing.

I think this all has to do with how you are going to compare and pick a winner in intelligence. The traditional way is usually with questions, which LLMs tend to do quite well at. They have a tendency to hallucinate, but in my experience the amount they hallucinate is less than the amount they don’t know.

The issue is really all about how you measure intelligence. Is it a word problem? A knowledge problem? A logic problem? And then the issue is, can the average person get your question correct? A big part of my statement here is that the average person is not very capable of answering those types of questions.

In this day and age of alternate facts and vaccine denial, science denial, and other ways that your average person may try to be intentionally stupid… I put my money on an LLM winning the intelligence competition versus the average person. I think the LLM would beat me in 90% of topics.

So the question to you is: how do you create this competition? What are the questions you’re going to ask that the average person is going to get right and the LLM will get wrong?

I have already suggested it. Trolley problems etc. Ask it to tell you its reasoning. It always becomes preposterous sooner or later.

My point here is that remembering the correct answer or performing a mathematical calculation are not a measure of understanding.

What we are looking for that sets apart INTELLIGENCE is an ability to genuinely understand. LLMs don’t have that, any more than older autocorrects did.

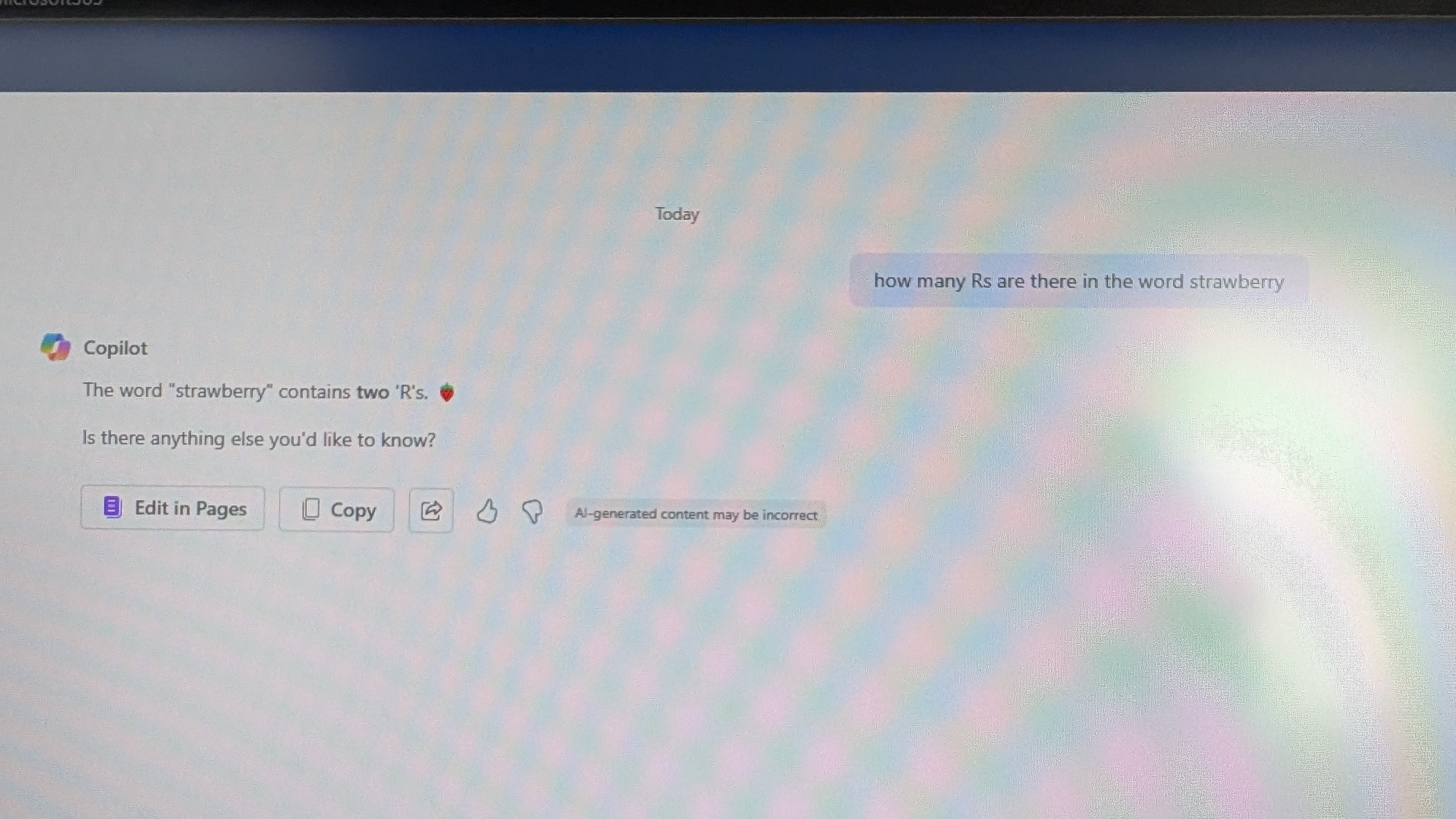

How many Rs are there in the word strawberry?

That was a very long time ago; that’s fine now.

I know it looks like I’m shitting on LLMs, but I’m really just trying to highlight that they still have gaps in reasoning that they’ll probably fix in this decade.

I asked Gemini and ChatGPT (the free one) and they both got it right. How many people do you think would get that right if you didn’t write it down in front of them? If Copilot gets it wrong, as per eletes’ post, then the AI success rate is two out of three, about 67%. Ask your average person walking down the street and I don’t think you would do any better. Plus there are a million questions on which the LLMs would vastly outperform your average human.

I think you might know some really stupid or perhaps just uneducated people. I would expect 100% of people to know how many Rs there are in “strawberry” without looking at it.

Nevertheless, spelling is memory and memory is not intelligence.
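For anyone who wants to check the strawberry question from this subthread, a minimal Python sketch (the helper name `count_letter` is made up for the example):

```python
def count_letter(word, letter):
    """Count occurrences of a letter in a word, ignoring case."""
    return word.lower().count(letter.lower())

print(count_letter("strawberry", "r"))  # prints 3
```

That a one-line string method settles the question supports the point above that this measures lookup or spelling, not understanding.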

They’re right. AI is smarter than them.

Don’t they reflect how you talk to them? I.e., my ChatGPT doesn’t have a sense of humor, and isn’t sarcastic or sad. It only uses formal language and doesn’t use emojis. It just gives me ideas that I do trial and error with.

Still better than reddit users…

I try not to think about them, honestly. (งツ)ว
