I’m just praying people will fucking quit it with the worries that we’re about to get SKYNET or HAL when binary computing would inherently be incapable of recreating the fast pattern recognition required to replicate or outpace human intelligence.

Moore’s law is about transistor counts, and by extension computing power, which is a measure of hardware performance, not of the software you can run on it.

Unfortunately it’s part of the marketing. Thanks, OpenAI, for that “Oh no… we can’t share GPT-2, too dangerous”, then… here it is. Definitely interesting then, but not world-shattering. Same for GPT-3… but through an exclusive partnership with Microsoft, all closed; rinse and repeat for GPT-4. It’s a scare tactic to lock down what was initially open, both directly and by closing the door behind them through regulation, or at least trying to.

Why you gotta invent new hardware just to speed up AI? Augh, these companies

Personally I can’t wait for a few good bankruptcies so I can pick up a couple of high end data centre GPUs for cents on the dollar

Search for the Nvidia P40 24GB on eBay: $200 each and surprisingly good for self-hosted LLMs. If you plan to build an array of GPUs, search for the P100 16GB instead. It’s the same price, but unlike the P40, the P100 supports NVLink, and its 16GB is HBM2 memory on a 4096-bit bus, so it’s still competitive in the LLM field. The P40’s 24GB is GDDR5, so its strong point is the amount of memory for the money, but it’s rather slow compared to the P100 and doesn’t support NVLink.
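The point that bus width is not the same thing as bandwidth can be made concrete with a back-of-envelope calculation. A rough sketch, assuming peak bandwidth = (bus width in bits / 8) × effective per-pin data rate, with per-pin rates of about 1.43 Gbps for the P100’s HBM2 and 7.2 Gbps for the P40’s GDDR5 (assumed approximate spec-sheet values, not from this thread). The tokens-per-second figure is a common rule-of-thumb ceiling for memory-bound LLM decoding (each generated token reads roughly all the weights once), not a benchmark, and the 6.5 GB model size is a hypothetical example.

```python
# Back-of-envelope GPU memory math; all spec numbers below are assumptions.

def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer across the bus x per-pin data rate."""
    return bus_width_bits / 8 * gbps_per_pin


def decode_ceiling_tokens_s(bandwidth_gb_s: float, model_gb: float) -> float:
    """Rough upper bound for memory-bound decoding: one full read of the weights per token."""
    return bandwidth_gb_s / model_gb


# Assumed per-pin data rates (approximate spec-sheet values, not measured here).
cards = {
    "P100 16GB (HBM2, 4096-bit)": peak_bandwidth_gb_s(4096, 1.43),
    "P40 24GB (GDDR5, 384-bit)": peak_bandwidth_gb_s(384, 7.2),
}

MODEL_GB = 6.5  # hypothetical: ~13B parameters quantized to ~4 bits per weight

for name, bw in cards.items():
    ceiling = decode_ceiling_tokens_s(bw, MODEL_GB)
    print(f"{name}: ~{bw:.0f} GB/s, decode ceiling ~{ceiling:.0f} tok/s")
```

Under these assumptions the P100 comes out at roughly 732 GB/s against roughly 346 GB/s for the P40, about a 2x gap, which is the “rather slow” difference described above; the P40’s advantage is that 24GB fits larger models.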

Personally, I don’t care much for the LLM stuff; I’m more curious how they perform in Blender.

Interesting. I did try a bit of remote rendering in Blender (just to learn how to use it via the CLI), so that makes me wonder who is indeed scraping the bottom of the barrel of “old” hardware and what they are using it for. Maybe somebody is renting old GPUs for render farms, maybe for other tasks. Any pointers to such a trend?

Thanks for the tips! I’m looking for something multi-purpose for LLM/Stable Diffusion messing about + a transcoder for Jellyfin. I’m guessing there isn’t really a sweet spot for those 3. I don’t really have the room or power budget for 2 cards, so I guess a P40 is probably the best bet?

The Intel A310 is the best $/perf transcoding card, but if the P40 supports NVENC, it might work for both transcoding and Stable Diffusion.

Try the Ryzen 8700G’s integrated GPU for transcoding, since it supports AV1, and these P-series GPUs for LLMs/Stable Diffusion; that would be a good mix, I think. Or, if you don’t have the budget for a new build, buy an Intel A380 GPU for transcoding; you can attach it through a PCIe riser like a mining GPU. Linus Tech Tips tested this GPU for transcoding, as I remember.

8700G

Hah, I’ve pretty recently picked up an Epyc 7452, so not really looking for a new platform right now.

The Arc cards are interesting, will keep those in mind

The lowest price on eBay for me is 290 euros :/ The P100s are 200 each, though.

Do you happen to know if I could mix a 3700 with a P100?

And thanks for the tips!

Ryzen 3700? Or RTX 3070? Please elaborate

Oh sorry, nvidia RTX :) Thanks!

I looked it up: the RTX 3070 has NVLink capabilities, though I wonder if all of them have it, so you can pair it if yours has NVLink.

Digging into it a bit more, it seems like I might be better off getting a 12GB 3060 - similar price point, but much newer silicon

It depends. If you want to run LLMs, data center GPUs are better; if you want to run general-purpose tasks, newer silicon is better. In my case I prefer a build that offloads tasks. Since I’m daily-driving Linux, my dream build is an AMD RX 7600 XT 16GB as the main GPU, an Nvidia P40 for LLMs, and the Ryzen 8700G’s 780M iGPU for transcoding and light tasks. That way you’ll have your usual gaming home PC that also serves as a server in the background while being used.

Can it run crysis?

How about cyberpunk?

I ran Doom on a GPU!

My only real hope out of this is that that Copilot button on keyboards becomes the 486 turbo button of our time.

Meaning you unpress it, and the computer gets 2x faster?

Actually you pressed it and everything got 2x slower. Turbo was a stupid label for it.

I could be misremembering but I seem to recall the digits on the front of my 486 case changing from 25 to 33 when I pressed the button. That was the only difference I noticed though. Was the beige bastard lying to me?

It varied by manufacturer.

Some turbo = fast others turbo = slow.

Lying through its teeth.

There was a bunch of DOS software that ran too fast to be usable on later processors, like a roguelike game where you fly across the map too fast to control. The turbo button would bring it down to 8086 speeds so that stuff was usable.

Damn. Lol, I kept that turbo button down all the time, thinking turbo = faster. TBF to myself, it’s a reasonable mistake! Mind you, I think a lot of what slowed that machine down was the hard drive: faster than loading stuff from a cassette tape, but only barely. You could switch the computer on and go make a sandwich while Windows 3.1 loaded.

Actually you used it correctly. The slowdown to 8086 speeds was applied when the button was unpressed.

When the button was pressed the CPU operated at its normal speed.

On some computers it was possible to wire the button to act in reverse (many people did not like having the button be “on” all the time, as they did not use any 8086 apps), but that was unusual. I believe that was the case with OP’s computer.

Oh, yeah, a lot of people made that mistake. It was badly named.

TIL, way too late! Cheers mate

That’s… the same thing.

Whoops, I thought you were responding to the first child comment.

I was thinking pressing it turns everything to shit, but that works too. I’d also accept, completely misunderstood by future generations.

Well now I wanna hear more about the history of this mystical shit button

Back in those early days, many applications didn’t have proper timing; they basically just ran as fast as they could. That was fine on an 8 MHz CPU, as you probably just wanted stuff to run as fast as it could (we weren’t listening to music or watching videos back then). When CPUs got faster (or perhaps when they started running at a multiple of the base clock speed), stuff was suddenly happening TOO fast. The turbo button was a way to slow the clock down by some amount so that legacy applications ran the way they were supposed to.

Most turbo buttons never worked for that purpose, though; they were still way too fast. Like, even ignoring other advances such as better IPC (or rather CPI back in those days), you don’t get to an 8 MHz 8086 by halving the clock speed of a 50 MHz 486. You get to 25 MHz. And practically all games past that 8086 era were written with proper timing code, because devs knew perfectly well that they were writing for more than one CPU. There’s also software that does the same job, but more precisely and flexibly.

It probably worked fine for the original PC-AT or something when running PC-XT programs (how would I know? Our first family box was a 386), but after that it was pointless. Then it hung on for years, then it vanished.
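The difference between the old “run as fast as you can” style and “proper timing code” can be sketched in a few lines. This is a minimal, hypothetical simulation, not real DOS code: the loop rate stands in for CPU speed, the naive loop moves a fixed amount per iteration, and the timed loop scales each step by the time per iteration (dt), which is one common way of decoupling game speed from CPU speed.

```python
# Simulated game loops: loop_hz stands in for how fast the CPU can spin the loop.

def run_naive(loop_hz: int, seconds: float) -> float:
    """Moves a fixed distance per iteration, so on-screen speed is tied to the CPU."""
    pos = 0.0
    for _ in range(int(loop_hz * seconds)):
        pos += 1.0
    return pos


def run_timed(loop_hz: int, seconds: float, speed: float = 10.0) -> float:
    """Moves speed * dt per iteration, so distance depends only on elapsed time."""
    dt = 1.0 / loop_hz
    pos = 0.0
    for _ in range(int(loop_hz * seconds)):
        pos += speed * dt
    return pos


for hz in (8, 25, 50):  # loosely echoing 8 MHz vs 25/50 MHz machines
    print(f"{hz:>3} iterations/s: naive={run_naive(hz, 1.0):.0f}, timed={run_timed(hz, 1.0):.2f}")
```

The naive loop covers 8, 25 or 50 units in one simulated second depending on the “machine”, which is the fly-across-the-map effect; the timed loop covers about 10 units on all three. It also shows why halving 50 gets you 25, still far from 8.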

A.I., Assumed Intelligence

More like PISS, a Plagiarized Information Synthesis System

I’ve noticed people have been talking less and less about AI lately, particularly online and in the media, and absolutely nobody has been talking about it in real life.

The novelty has well and truly worn off, and most people are sick of hearing about it.

The hype is still percolating, at least among the people I work with and at the companies of people I know. Microsoft pushing Copilot everywhere makes it inescapable to some extent in many environments; there are people out there who have somehow only vaguely heard of ChatGPT and are now encountering LLMs for the first time at work and starting the hype cycle fresh.

Yeah, now we are gonna get the reality of deep fakes; fun times.

It’s like 3D TVs, for a lot of consumer applications tbh

Oh fuck that’s right, that was a thing.

Goddamn

3D has been a thing every 15 years or so

3D TVs were a commercial fad once and I haven’t seen them in forever.

2016 may have been the end of them

Yes but 3D is always a thing periodically.

I used shutter glasses with two Voodoo2 cards…

I used shutter glasses with the Sega Master System back in ’87. They were rad af

Yes, exactly.

So should we be fearing a new crash?

Do you have money and/or personal emotional validation tied up in the promise that AI will develop into a world-changing technology by 2027? With AGI in everyone’s pocket giving them financial advice, advising them on their lives, and romancing them like a best friend with Scarlett Johansson’s voice whispering reassurances in your ear all day?

If you are banking on any of these things, then yeah, you should probably be afraid.

Welp, it was ‘fun’ while it lasted. Time for everyone to adjust their expectations to much more humble levels than what was promised and move on to the next scheme. After the Metaverse, NFTs and ‘Don’t become a programmer, AI will steal your job literally next week!11’, I’m eager to see what they come up with next. And by eager I mean I’m tired. I’m really tired and hope the economy just takes a damn break from breaking things.

I just hope I can buy a graphics card without having to sell organs some time in the next two years.

If there’s even a GPU being sold. It’s much more profitable for Nvidia to just make compute-focused chips than to upgrade their gaming lineup. GeForce will just get the compute chip rejects and laptop GPUs for the lower-end parts. After the AI bubble bursts, maybe they’ll get back to their gaming roots.

Don’t count on it. It turns out that the sort of stuff that graphics cards do is good for lots of things, it was crypto, then AI and I’m sure whatever the next fad is will require a GPU to run huge calculations.

AI is shit, but IMO we have been making amazing progress in computing power; it’s just that we can’t really innovate atm, it’s just more of a race to the bottom.

——

I thought capitalism bred innovation, did tech bros lied?

/s

I’m sure whatever the next fad is will require a GPU to run huge calculations.

I also bet it will; cf. my earlier comment on render farms and looking for what “recycles” old GPUs https://lemmy.world/comment/12221218, namely that it makes sense to prepare for it now and look for what comes next BASED on the current most popular architecture. It might not be the most efficient, but it will probably be the most economical.

I’d love an upgrade for my 2080 Ti, really wish Nvidia didn’t piss off EVGA into leaving the GPU business…

My RX 580 has been working just fine since I bought it used. I’ve not been able to justify buying a new (used) one. If you have one that works, why not just stick with it until the market gets flooded with used ones?

AI doesn’t need to steal all programmer jobs next week, but I doubt there will still be many available in 2044, when LLMs alone still have so much room to improve over the next 20 years.

But if it doesn’t disrupt it isn’t worth it!

/s

move on to the next […] eager to see what they come up with next.

That’s a point I’ve been making in a lot of conversations lately: IMHO the bubble didn’t pop BECAUSE capital doesn’t know where to go next. Despite reports from big banks that there is a LOT of investment for not a lot of actual returns, people are still waiting to see where to put that money next. Until there is such a place, they believe it’s more beneficial to keep the bet going.

The more you bAI


I’ve spent time with an AI laptop the past couple of weeks and ‘overinflated’ seems a generous description of where end user AI is today.

I don’t think AI is ever going to completely disappear, but I think we’ve hit the barrier of usefulness for now.

I find it insane when “tech bros” and AI researchers at major tech companies try to justify the wasting of resources (like water and electricity) in order to achieve “AGI” or whatever the fuck that means in their wildest fantasies.

These companies have no accountability for the shit that they do and consistently ignore all the consequences their actions will cause for years down the road.

What’s funny is that we already have general intelligence in billions of brains. What tech bros want is a general intelligence slave.

Well put.

I’m sure plenty of people would be happy to be a personal assistant for searching, summarizing, and compiling information, as long as they were adequately paid for it.

It’s research. Most of it never pans out, so a lot of it is “wasteful”. But if we didn’t experiment, we wouldn’t find the things that do work.

Most of the entire AI economy isn’t even research. It’s just grift. Slapping a label on ChatGPT and saying you’re an AI company. It’s hustlers trying to make a quick buck from easy venture capital money.

You can probably say the same about all fields, even those that have formal protections and regulations. That doesn’t mean that there aren’t people who have PhDs in the field and are trying to improve it for the better.

Sure but typically that’s a small part of the field. With AI it’s a majority, that’s the difference.

No, it is the majority in every field.

Specialists are always in the minority, that is like part of their definition.

The majority of every field is fraudsters? Seriously?

Is it really a grift when you are selling possible value to an investor who would make money from possible value?

As in, there is no lie: investors know it’s a gamble and are just looking for the gamble that everyone else bets on, not for one that would provide real value.

I would classify speculation as a form of grift. Someone gets left holding the bag.

I don’t think I’ve heard a lot of actual research in the AI area not connected to machine learning (which may be just one component, not really necessary at that).

I agree, but these researchers/scientists should be more mindful about the resources they use up in order to generate the computational power necessary to carry out their experiments. AI is good when it gets utilized to achieve a specific task, but funneling a lot of money and research towards general purpose AI just seems wasteful.

I mean, general-purpose AI doesn’t cap out at human intelligence, and you could use it to come up with ideas for better resource management.

Could also be a huge waste but the potential is there… potentially.

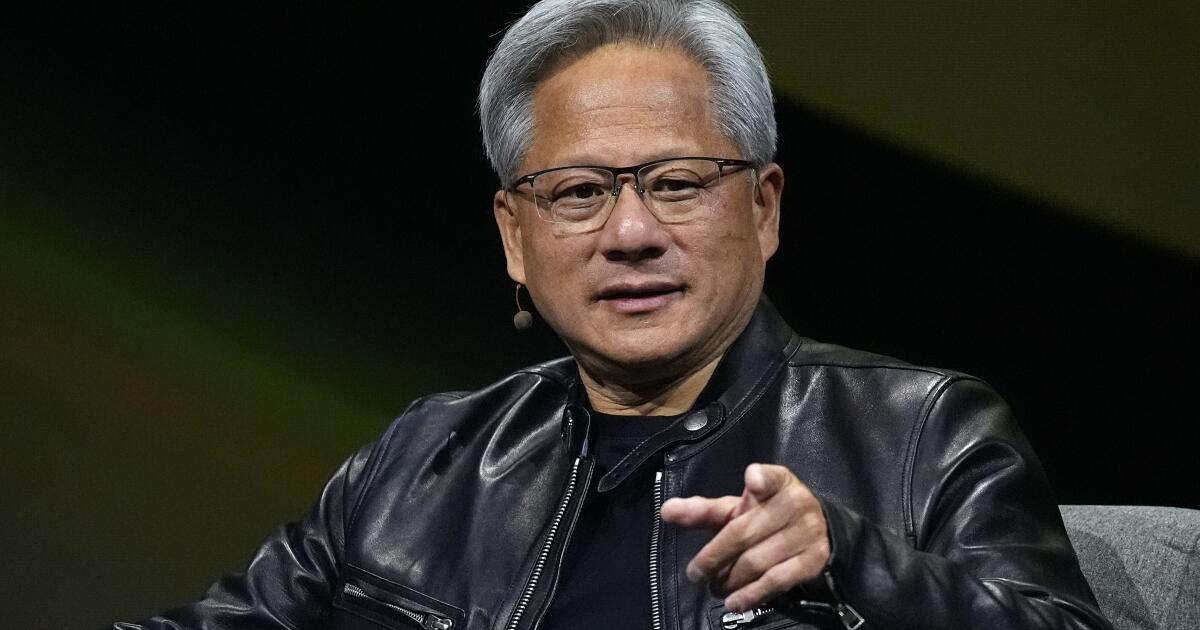

But the trillion dollar valued Nvidia…

$2.5T currently to be exact

Nvidia is diversified in AI, though. Disregarding LLM, it’s likely that other AI methodologies will depend even more on their tech or similar.

Maybe we can have normal priced graphics cards again.

I’m tired of people pretending £600 is a reasonable price to pay for a mid range GPU.

I’m not sure. These companies are building data centers with so many GPUs that they have to be spread out geographically with respect to the power grid, because if it were all done in one place it would take the grid down.

And they are just building more.

But the company doesn’t have the money. Stock value means investor valuation, not company funds.

Once a company goes public for the very first time, it gets money into its account, but from then on, that’s just investors speculating and hoping for a nice return when they sell again.

Of course there should be some correlation between the company’s profitability and the stock price, so ideally they do have quite some money, but in an investment craze like this, the correlation is far from 1:1. So whether they can still afford to build the data centers remains to be seen.

They’re not building them for themselves; they’re selling GPU time and SuperPods. Their valuation is because there’s STILL a lineup a mile long for their flagship GPUs. I get that people think AI is a fad, and its public form may be, but there are thousands of GPU-powered projects going on behind closed doors that are going to consume whatever GPUs get made for a long time.

Their valuation is because there’s STILL a lineup a mile long for their flagship GPUs.

Genuinely curious: how do you know where the valuation, any valuation, comes from?

This is an interesting story, and it might be factually true, but as far as I know, unless someone has actually asked the biggest investors WHY they bet on a stock, nobody knows why a valuation is what it is. We might have guesses, and they might even be correct, but they also change.

I’ve mentioned it a few times here before, but my bet is: yes, what you mentioned, BUT also because the same investors don’t know where else to put their money yet and thus simply can’t jump ship. They are stuck there, and it might again be because they initially thought the demand was high and nobody else could fulfill it, but I believe that’s not correct anymore.

but I believe that’s not correct anymore.

Why do you believe that? As far as I understand, other HW exists…but no SW to run on it…

Right, and I mentioned CUDA earlier as one of the reasons for their success, so it’s definitely something important. Clients might be interested in e.g. Google TPUs, startups like Etched, Tenstorrent, Groq, Cerebras Systems, or heck, even designing their own, but are probably limited by their current stack relying on CUDA. I imagine, though, that if the backlog keeps on existing there will be abstraction libraries, at least for the most popular frameworks, e.g. TensorFlow, JAX or PyTorch, simply because the cost of waiting is too high.

Anyway, what I meant isn’t about hardware or software but rather ROI, namely when Goldman Sachs and others issue analyst reports saying that the promise itself isn’t matched by actual usage from paying customers.

Those reports might affect investments from the smaller players, but the big names (Google, Microsoft, Meta, etc.) are locked in a race to the finish line. So their investments will continue until one of them reaches the goal… [insert sunk cost fallacy here]… and I think we’re at least 1-2 years from there.

Edit: posted too soon

Well, I’m no stockologist, but I believe when your company has a perpetual sales backlog with a 15-year head start on your competition, that should lead to a pretty high valuation.

I’m also no stockologist, and I agree, but that’s not my point. The stock should be high, but that might already have been factored in; this is not a new situation, so theoretically it has been priced in since investors understood it. My point anyway isn’t about the price itself but rather the narrative (or reason, like the backlog and lack of competition you mention) that investors themselves believe.

Yeah, someone else commented with their financials and they look really good, so while I certainly agree that they are overvalued because we are in an AI training bubble, I don’t see it popping for a few years, especially given that they are selling the shovels. Every big player in the space is set on orders of magnitude of additional compute for the next 2 years or more. It doesn’t matter if the company they sold GPUs to fails if they already sold them. Something big and unexpected would have to happen to upset that trajectory right now, and I don’t see it, because companies are in the exploratory stage of AI tech, so no one knows what doesn’t work until they get the compute they need. I could be wrong, but that’s what I see as a watcher of AI news channels on YouTube.

The co-founder of OpenAI just got a billion dollars for his new 3-month-old AI startup. They are going to spend that money on talent and compute. X just announced a data center with 100,000 GPUs for Grok 2 and plans to build the largest in the world, I think? But that’s Elon, so grains of salt and all that are required there. Nvidia are working with robotics companies to make AI that can train robots virtually on a task so that in the real world a robot will succeed on the first try. No more Boston Dynamics abuse compilation videos. Right now agentic AI workflows are supposed to be the next step, so there will be overseer AI algorithms to develop and train.

All that is to say there is a ton of work that requires compute for the next few years.

{Opinion here} – I feel like a lot of people are seeing grifters and a wobbly GPT-4o launch and calling the game too soon. It takes time to deliver the next product when it’s a new invention in its infancy and the training parameters are scaling nearly exponentially from gen to gen.

I’m sure the structuring of payment for the compute devices isn’t as simple as my purchase of a gaming GPU from microcenter, but Nvidia are still financially sound. I could see a lot of companies suffering from this long term but nvidia will be The player in AI compute, whatever that looks like, so they are going to bounce back and be fine.

Couldn’t agree more. There is quite a bit of AI vaporware but NVIDIA is the real stuff and will weather whatever storm comes with ease.

I think they’re going to be bankrupt within 5 years. They have way too much invested in this bubble.

Fall in share price, yes.

Bankrupt, no. Their debt-to-equity ratio is 0.1455. They can pay off their $11.23B debt with 2 months of revenue. They can certainly afford the interest payments.
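The arithmetic behind that comment can be checked quickly. A sketch assuming the usual definition (debt-to-equity = total debt / shareholders’ equity) and taking the $11.23B debt and 0.1455 ratio as stated in the comment, not independently verified; the revenue figures are only what the “2 months of revenue” claim implies, not reported numbers.

```python
# Figures from the comment above, in billions of USD; treated as given, not verified.
DEBT_B = 11.23
DEBT_TO_EQUITY = 0.1455

# Debt-to-equity = debt / equity, so equity = debt / ratio.
implied_equity_b = DEBT_B / DEBT_TO_EQUITY

# "Pay off the debt with 2 months of revenue" implies monthly revenue of at least debt / 2.
MONTHS_TO_REPAY = 2
implied_min_monthly_revenue_b = DEBT_B / MONTHS_TO_REPAY
implied_min_annual_revenue_b = implied_min_monthly_revenue_b * 12

print(f"Implied equity: about ${implied_equity_b:.1f}B")
print(f"Implied revenue: at least ${implied_min_monthly_revenue_b:.2f}B/month "
      f"(about ${implied_min_annual_revenue_b:.1f}B/year)")
```

Taken at face value, the claim works out to equity of roughly $77B and annualized revenue of at least roughly $67B; a ratio around 0.15 means the debt is small relative to equity, which is the commenter’s point about bankruptcy risk.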

I highly doubt that. If the AI bubble pops, they’ll probably be worth a lot less relative to other tech companies, but hardly bankrupt. They still have a very strong GPU business, they probably have an agreement with Nintendo on the next Switch (like they did with the OG Switch), and they could probably repurpose the AI tech in a lot of different ways, not to mention various other projects where they package GPUs into SOCs.

It really depends on how much they’ve invested in building AI chips.

Sure, but their deliveries have also been incredibly large. I’d be surprised if they haven’t already made enough from previous sales to cover all existing and near-term investments into AI. The scale of the build-out by big cloud firms like Amazon, Google, and Microsoft has been absolutely incredible, and Nvidia’s only constraint has been making enough of them to sell. So even if support completely evaporates, I think they’ll be completely fine.

They don’t build the chips at all. They pay TSMC.

NVIDIA’s uses of AI technology aren’t going to pop; things like DLSS are here to stay. The value of the company and their sales are inflated by the bubble, but NVIDIA’s core technology is applicable way beyond the chatbot hype.

Bubbles don’t mean there’s no underlying value. The dot com bubble didn’t take down the internet.

Pop pop!

Magnitude!

Argument!