I already host multiple services behind Caddy as my reverse proxy. With Jellyfin, I’m worried about authentication. How do you secure it?

I’ve put it behind WireGuard since only my wife and I use it. Otherwise I’d just use Caddy or another reverse proxy that does HTTPS, and keep both Jellyfin and Caddy up to date.

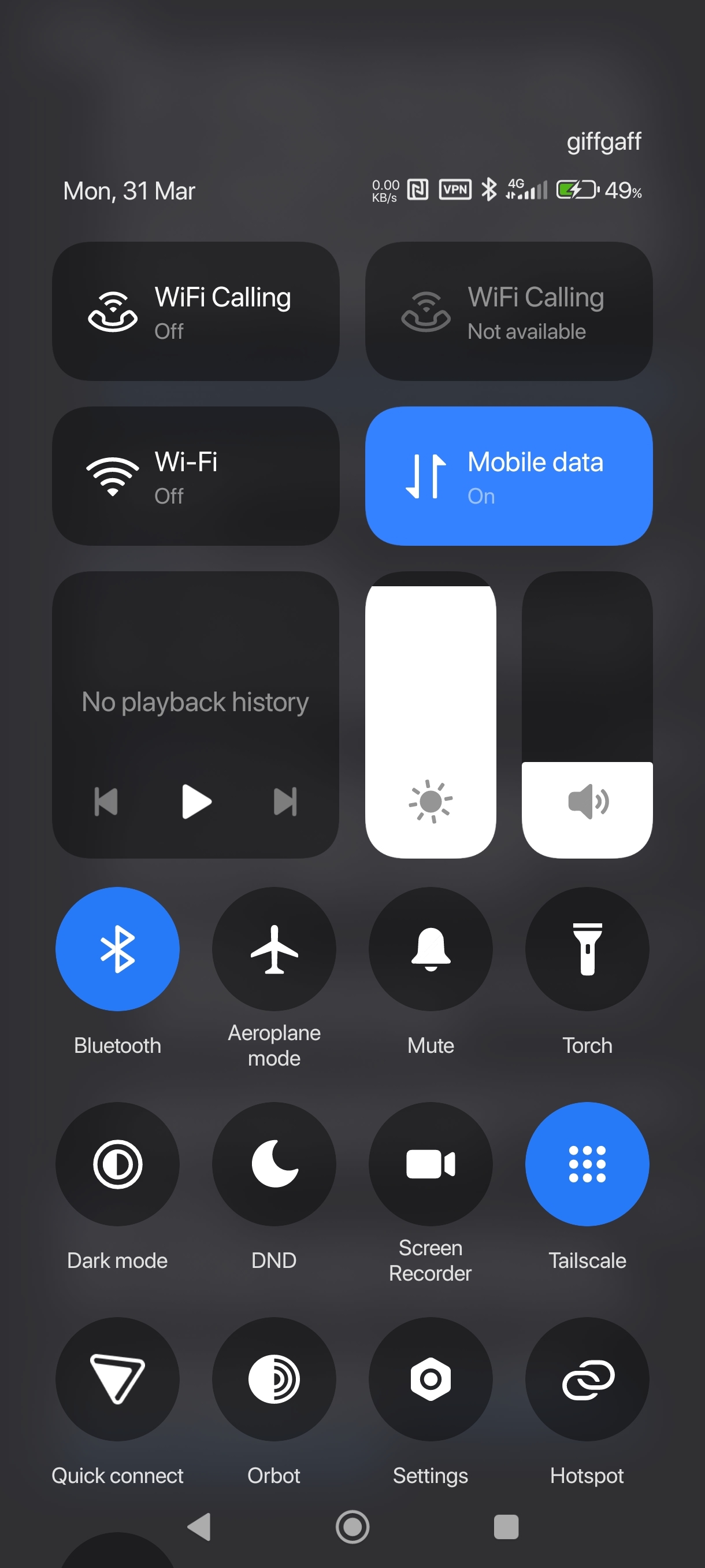

Use a VPN like Tailscale

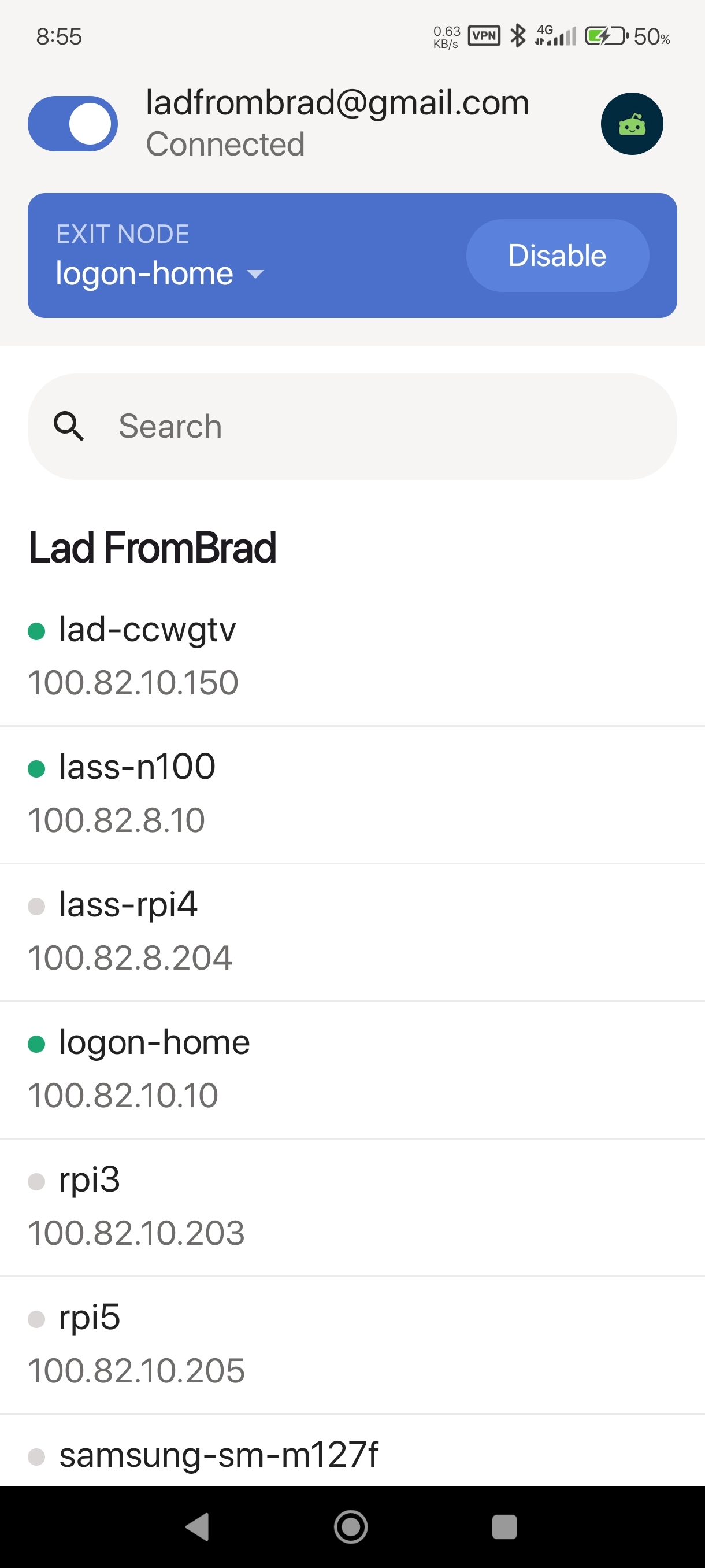

I am using Tailscale, but I went a little further to let my family log in with their Gmail accounts (they would not create a new account for a million dollars).

Exposed via Tailscale Funnel: Jellyfin, Keycloak (adminless)

Private, Tailscale only: Keycloak admin, Postgres DB

I connect Jellyfin to the adminless Keycloak instance using the SSO plugin, and configure Keycloak (through the private admin instance) to use Google as an identity provider with a private app.

The SSO plugin is good to know about. Does it address any of the security issues someone mentioned earlier?

I’d say it’s nearly as secure as basic authentication. If you restrict deletion to admin users and use role- (or group-) based auth in Keycloak to limit the Jellyfin admin ability to people with strong passwords, I think you are good. The only remaining risk is that someone could delete your media if an admin user’s Gmail account is hacked.

I will say it’s not as secure as restricting access to a VPN; you could be brute-forced. Frankly, it would be preferable to set up rate limiting, but that was a bridge too far for me.
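
Rate limiting is the piece skipped here. A minimal sketch of the idea, assuming you track login attempts per client IP somewhere in front of Jellyfin (for example in a small auth shim); the class name and the 5-attempts-per-60-seconds threshold are hypothetical, and this is not something Jellyfin, Keycloak, or Caddy ship in this form:

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class LoginRateLimiter:
    """Sliding-window limiter: at most `max_attempts` logins per IP per `window_s` seconds."""

    def __init__(self, max_attempts: int = 5, window_s: float = 60.0) -> None:
        self.max_attempts = max_attempts
        self.window_s = window_s
        self._attempts: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, ip: str, now: Optional[float] = None) -> bool:
        """Record a login attempt from `ip`; return False if it is over the limit."""
        now = time.monotonic() if now is None else now
        attempts = self._attempts[ip]
        # Forget attempts that have slid out of the window.
        while attempts and now - attempts[0] >= self.window_s:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False  # rejected attempts are not recorded
        attempts.append(now)
        return True
```

Rejected attempts aren’t recorded, so a client is let back in once its oldest attempts age out of the window; recording them too would keep an attacker blocked for as long as the hammering continues.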

I set mine up with Authelia 2FA and restricted media deletion to one user: The administrator.

All others aren’t allowed to delete. Not even me.

Is it just you that uses it, or do friends and family use it too?

The best way to secure it is to use a VPN like Tailscale, which avoids having to expose it to the public internet.

This is what I do for our security cameras. My wife installed Tailscale on her laptop and phone, created an account, and I added her to my Tailnet. I created a home screen icon for the Blue Iris web UI on her phone and mentioned to her, “if the cameras don’t load, open Tailscale and make sure it’s connected”. Works great - she hasn’t complained about anything at all.

If you use Tailscale for everything, there’s no need to have a reverse proxy. If you use Unraid, version 7 added the ability to add individual Docker containers to the Tailnet, so each one can have a separate Tailscale IP and subdomain, and thus all of them can run on port 80.

if the cameras don’t load, open Tailscale and make sure it’s connected

I’ve been using Tailscale for a few months now and this is my only complaint. On Android and macOS, the Tailscale client gets randomly killed. So it’s an extra thing you have to manage.

It’s almost annoying enough to make me want to host my services on the actual internet… almost… but not yet.

Try WG Tunnel instead. It will reconnect on connection loss, but you lose the Tailscale features (no big deal if you use dynamic DNS).

I use plain WireGuard on my phone, essentially always on, with no issues. I wonder why the Tailscale app can’t stay open.

I suspect that it goes down and stays down whenever there is an app update, but I haven’t confirmed it yet.

Does the plain wireguard app stay up during updates?

The Android WireGuard app hasn’t been updated in 18 months. It’s extremely simple, with a small code base, so there basically isn’t anything to update. It uses the WireGuard kernel module where available, which is itself only around 4,000 lines of code. It’s so simple that it became stable very quickly, and there is nothing left to update right now.

https://git.zx2c4.com/wireguard-android/about/

I personally get it from Obtainium to bypass the Play Store.

Same: WireGuard with the “WG Tunnel” app, which adds conditional auto-connect: if not on home Wi-Fi, connect to the tunnel.
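
The auto-connect rule described here boils down to a one-line decision. A sketch, assuming the app can read the current Wi-Fi SSID (None when on mobile data); the function name and SSID names are hypothetical, and this is not WG Tunnel’s actual code:

```python
from typing import Optional, Set


def should_connect(current_ssid: Optional[str], trusted_ssids: Set[str]) -> bool:
    """Bring the tunnel up unless we're on a trusted (home) Wi-Fi network.

    `current_ssid` is None when on mobile data or not connected to Wi-Fi.
    """
    return current_ssid is None or current_ssid not in trusted_ssids
```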

I just stay connected to WireGuard even at home. The only downside is that the odd time I need to Chromecast, it has to be shut off.

Can you add a split tunnel for just the Chromecast app (I presume that’s how it works; I don’t use Chromecast) so that that specific app always ignores your VPN?

I haven’t tried it, but the app has the ability to select which app it tunnels.

When you make a new tunnel, it says “All applications”; if you tap that, you can select specific apps to include or exclude.

I can stay connected, still works, but I don’t think I need the extra hoops.

Oh shit, you may have just solved my only issue with Symfonium

conditional Auto-Connect. If not on home wifi, connect to the tunnel.

You don’t need this with Tailscale since it uses a separate IP range for the tunnel.

Edit: Tailscale (and Wireguard) are peer-to-peer rather than client-server, so there’s no harm leaving it connected all the time, and hitting the VPN IPs while at home will just go over your local network.

The one thing you probably wouldn’t do at home is use an exit node, unless you want all your traffic to go through another node on the Tailnet.
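
Why leaving it connected is harmless: Tailscale hands out node addresses from 100.64.0.0/10, which doesn’t overlap a typical home LAN, so only traffic to tailnet IPs enters the tunnel. A simplified sketch, assuming a home LAN of 192.168.1.0/24 (hypothetical) and no exit node:

```python
import ipaddress

# Tailscale assigns node addresses from the 100.64.0.0/10 range.
TAILNET = ipaddress.ip_network("100.64.0.0/10")
# Hypothetical home LAN; substitute your own subnet.
HOME_LAN = ipaddress.ip_network("192.168.1.0/24")


def route_for(dest: str) -> str:
    """Classify where traffic to `dest` goes with the VPN up and no exit node."""
    addr = ipaddress.ip_address(dest)
    if addr in TAILNET:
        return "tunnel"  # WireGuard, peer-to-peer (direct over the LAN when at home)
    if addr in HOME_LAN:
        return "local"
    return "default"  # normal internet route, untouched by the VPN


# The two ranges don't collide, so the tunnel can stay up at home.
assert not TAILNET.overlaps(HOME_LAN)
```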

I also have a different subnet for WG. Not sure I understand what you’re saying…

If you have a separate subnet for it, then why do you only want it to be connected when you’re not on home wifi? You can just leave it connected all the time since it won’t interfere with accessing anything outside that subnet.

One of the main benefits of Wireguard (and Tailscale) is that it’s peer-to-peer rather than client-server. You can use the VPN IPs at home too, and it’ll add barely any overhead.

(leaving it connected is assuming you’re not routing all your traffic through one of the peers)

My network is not publicly accessible. I can only access the internal services while connected to my VPN or when I’m physically at home. I connect to WG to use the local DNS (pihole) or to access the selfhosted stuff. I don’t need to be connected while I’m at home… In a way, I am always using the home DNS.

Maybe I’m misunderstanding what you’re saying…

Yeah my wife and I are both on Android, and I haven’t been able to figure out why it does that.

The Android client is open-source so maybe someone could figure it out. https://github.com/tailscale/tailscale-android

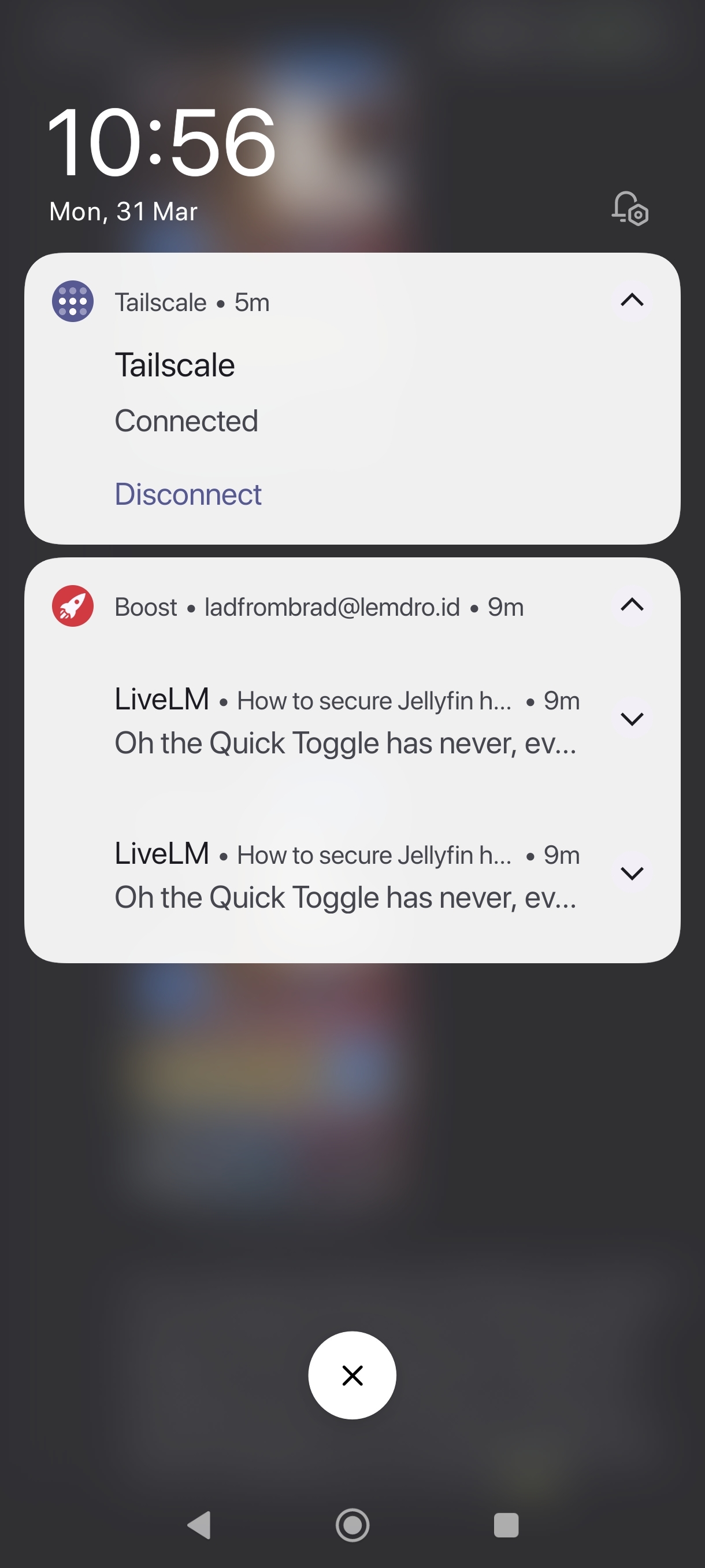

It loses its foreground notification, and I’ve found that’s what kills it for me, even though the Quick Toggle and the app itself show it as running.

If I disconnect and reconnect, the notification comes back. Something even weirder I’ve found on my device (a Xiaomi, with its infamous OOM/background app killer…) is that Tailscale actually still works fine most of the time without the foreground notification. I’d hazard about 70% of the time for me?

A while back, a lot of us found that v1.5.2 fugged around with the persistent notification, and it went RIP.

Oh the Quick Toggle has never, ever worked correctly. I hoped they fixed it after the UI refresh update but unfortunately not yet.

What device/ROM are you using?

It’s been very iffy for me on and off from MIUI through HyperHyperOS, but checking just now?

Works fine

Like I say, the foreground notification seemed to be the lifeline for some of us, keeping it alive even after, IIRC, more restrictions came in with later versions of Android (forgive me, I’m very lazy these days and just skim Mishaal’s TG feed 😇)?

e: also comment ;)

For me it’s always been busted, both on AOSP and MIUI/HyperOS…

Huh. The nearest I have to an actual “AOSP” device is my King Kong Cubot phone that has probably the cleanest version of “stock Android” I’ve ever seen, and I’m going to presume you mean like a Google Pixel / Graphene etc?

Tailscale and the QS tile/notification were solid on that Cubot, but to be honest, I barely turn it on these days and it’s now one of those drawer phones.

MIUI/HyperHyperOS, though, is a different kettle of fish, and exempting Tailscale from its App lel Killer does seem to work. 70-80%ish…

But sometimes something just fuggs up and I have to turn it off and on, like most thingys I own 🙈

Works great, and has for some time, on my P7P.

Ensure you’ve allowed background usage and turned off “Manage app if unused”.

Keep the notification on and allow notifications.

Have you tried disabling battery optimization for tailscale?

I did this and it still seems to randomly disconnect.

Look up your phone on dontkillmyapp.com and make sure tailscale is excluded from battery and network “optimization”.

Maybe headscale will do better?

Headscale is a replacement for the coordination servers, which are only used to distribute configs and help nodes find each other. It won’t change client-side behaviour.

If you make Tailscale your VPN in Android it will never be killed. Mileage may vary depending on flavor of Android. I’ve used this on stock Pixel and GrapheneOS.

Go to Settings > Network & internet > VPN.

Tap the Cog icon next to Tailscale and select Always-on VPN.

Holy moly, I did not know this existed! Thanks! Just turned this on!

Jellyfin is secure by default, as long as you have HTTPS. Just choose a secure password.

No, it isn’t.

EDIT: I quickly want to add that Jellyfin is still great software. Just please don’t expose it to the public web, use a VPN (Wireguard, Tailscale, Nebula, …) instead.

Wtf. Thank you

Some of these are bonkers. The argument for not fixing them because of backwards compatibility is even wilder. Which normal client would need to get data for another account whose auth token it doesn’t have?

Just make a different API prefix that’s secure and subject to change, and once the official clients are updated, deprecate the insecure API (off by default).

That way you preserve backwards compatibility without forcing everyone to be insecure.

Even just basic API versioning would be sufficient. .NET offers a bunch of ways to handle breaking changes in APIs
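
The versioning idea, sketched in Python rather than .NET for brevity: the legacy route keeps its old, permissive behaviour but is off unless explicitly enabled, while the new route checks that the auth token belongs to the requested account. The routes, user store, and function names are hypothetical and don’t model Jellyfin’s real API:

```python
from typing import Callable, Dict

# Hypothetical user store; not Jellyfin's data model.
USERS: Dict[str, dict] = {"alice": {"name": "alice"}, "bob": {"name": "bob"}}


def get_user_v1(requested: str, caller: str) -> dict:
    """Legacy behaviour: any authenticated caller may read any account."""
    return USERS[requested]


def get_user_v2(requested: str, caller: str) -> dict:
    """Secure behaviour: the token's owner may only read their own account."""
    if requested != caller:
        raise PermissionError("token does not belong to the requested account")
    return USERS[requested]


ROUTES: Dict[str, Callable[[str, str], dict]] = {"v1": get_user_v1, "v2": get_user_v2}


def dispatch(version: str, requested: str, caller: str, legacy_enabled: bool = False) -> dict:
    """Route a request by API version; the insecure legacy API is off by default."""
    if version == "v1" and not legacy_enabled:
        raise LookupError("legacy API is disabled")
    return ROUTES[version](requested, caller)
```

Old clients keep working only for admins who flip `legacy_enabled`; everyone else gets the safe behaviour without a breaking change to the v1 contract.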

I wouldn’t say “great”; it’s OK software. And not even because of all those security issues, which are a nightmare too. They broke search speed months ago and still have no idea why: it’s insanely slow, and on top of that it somehow lags the entire client while searching, not just the server, which is the only thing doing the query. Lots of issues with that alone.

Oh boy. Nope. My friends are gonna have to fiddle with a VPN; forget exposing JF to the outside…

WireGuard honestly takes like 30 seconds to set up once you learn how to use it.
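
For reference, a WireGuard client config is a short INI-style file in the wg-quick format. A sketch that renders one with split tunnelling (only the VPN subnet and home LAN go through the tunnel), assuming placeholder keys, a hypothetical endpoint `vpn.example.com:51820`, and hypothetical subnets:

```python
import ipaddress
from typing import List


def render_client_config(
    private_key: str,
    address: str,
    dns: str,
    server_public_key: str,
    endpoint: str,
    allowed_ips: List[str],
    keepalive: int = 25,
) -> str:
    """Render a wg-quick style client config; `allowed_ips` controls what is tunnelled."""
    # Validate the CIDRs so a typo fails here rather than at `wg-quick up`.
    for cidr in [address, *allowed_ips]:
        ipaddress.ip_network(cidr, strict=False)
    return "\n".join([
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",
        f"DNS = {dns}",
        "",
        "[Peer]",
        f"PublicKey = {server_public_key}",
        f"Endpoint = {endpoint}",
        f"AllowedIPs = {', '.join(allowed_ips)}",
        f"PersistentKeepalive = {keepalive}",
        "",
    ])


config = render_client_config(
    private_key="<client-private-key>",
    address="10.8.0.2/32",
    dns="10.8.0.1",
    server_public_key="<server-public-key>",
    endpoint="vpn.example.com:51820",
    allowed_ips=["10.8.0.0/24", "192.168.1.0/24"],
)
print(config)
```

Swapping `allowed_ips` for `["0.0.0.0/0"]` would send all IPv4 traffic through the tunnel instead.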

I use good ol’ obscurity. My reverse proxy requires the correct subdomain to access any service I host, and my domain has a wildcard DNS entry. So if you access asdf.example.com you get an error, same as when accessing my IP directly, but going to jellyfin.example.com works. And since I don’t post my valid URLs anywhere, no web scraper can find them. This filters out 99% of bots, and the rest are handled by Authelia and CrowdSec.
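
The Host-header filtering described here, as a sketch: with a wildcard DNS record, every subdomain and bare-IP request reaches the proxy, and only explicitly listed hostnames get forwarded. The hostname table is hypothetical (8096 is Jellyfin’s default HTTP port), and in practice Caddy or Nginx does this for you:

```python
from typing import Dict, Optional, Tuple

# Hypothetical table of the only hostnames that get forwarded anywhere.
BACKENDS: Dict[str, str] = {
    "jellyfin.example.com": "http://127.0.0.1:8096",
}

ERROR_PAGE = "static error page"


def route(host_header: Optional[str]) -> Tuple[int, str]:
    """Return (status, target) for a request based only on its Host header."""
    if not host_header:
        return 404, ERROR_PAGE
    # Strip any port, normalise case, and drop a trailing dot.
    host = host_header.split(":", 1)[0].lower().rstrip(".")
    backend = BACKENDS.get(host)
    if backend is None:
        # Wildcard DNS sends every subdomain (and bare-IP requests) here,
        # so anything not explicitly listed gets the same error page.
        return 404, ERROR_PAGE
    return 200, backend
```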

That’s not how web scrapers work lol. There’s no such thing as obscurity except for humans.

It seems to me that it works. I don’t get any web scrapers hitting anything but my main domain, and I can’t find any of my subdomains on Google.

Please tell me how you think it works. Maybe I overlooked something…

My understanding is that scrapers check every domain and subdomain. You’re making it harder, but not impossible. Everything gets scraped.

It would be better if you also did IP whitelisting, rate limiting to prevent bots, bot detection via cloudflare or something similar, etc.

Are you using HTTPS? It’s highly likely that your domains/certificates are being logged for certificate transparency. Unless you’re using wildcard domains, it’s very easy to enumerate your sub-domains.
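
To illustrate: certificates from public CAs such as Let’s Encrypt are recorded in public CT logs, which anyone can search (crt.sh is one such service). A sketch of what an observer extracts from the logged certificate names; the SAN lists are hypothetical examples, not output of any real CT API:

```python
from typing import Iterable, List, Set


def exposed_names(certificates: Iterable[List[str]], apex: str) -> Set[str]:
    """Hostnames under `apex` that an observer learns from logged certificate SANs."""
    found: Set[str] = set()
    for sans in certificates:
        for name in sans:
            name = name.lower()
            if name.startswith("*."):
                continue  # a wildcard reveals the zone, not which subdomains exist
            if name == apex or name.endswith("." + apex):
                found.add(name)
    return found


# Hypothetical logged certificates: one per service vs. a single wildcard cert.
per_service = [["jellyfin.example.com"], ["immich.example.com"]]
wildcard = [["example.com", "*.example.com"]]
```

With per-service certificates both service names leak; with the wildcard certificate only the apex domain does.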

And since i don’t post my valid urls anywhere no web-scraper can find them

You would ah… be surprised. My urls aren’t published anywhere and I currently have 4 active decisions and over 300 alerts from crowdsec.

It’s true none of those threat actors know my valid subdomains, but that doesn’t mean they don’t know I’m there.

Of course I get a bunch of scanners hitting ports 80 and 443. But if they don’t use the correct domain, they all end up on an Nginx server hosting a static error page. Not much they can do there.

This is how I found out Google harvests the URLs I visit through Chrome.

Got google bots trying to crawl deep links into a domain that I hadn’t published anywhere.

This is true, and is why I annoyingly have to keep robots.txt on my unpublished domains. Google does honor them for the most part, for now.

That reminds me … another annoying thing Google did was list my private jellyfin instance as a “deceptive site”, after it had uninvitedly crawled it.

A common issue it seems.

They did that with most of my subdomains

Unsurprising, but still shitty. Par for the course for the company these days.

If you’re using jellyfin as the subdomain, that’s an easily guessable name; if you use random words unrelated to what’s being hosted, the chances are lower, e.g. salmon.example.com. Also, ideally your server should reply with a 200 to every (*) subdomain so scrapers can’t tell valid from invalid ones. And ideally it also sends some random data on each of those so they don’t all look exactly the same. But that’s approaching paranoid levels of security.
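
Both ideas fit in a few lines. A sketch, assuming a hypothetical word list for the subdomain and a catch-all handler that returns 200 with a random-length random body; Python’s `secrets` module is used so the choices aren’t predictable:

```python
import secrets
from typing import Tuple

# Hypothetical word list; use a large one (thousands of words) in practice.
WORDS = ["salmon", "granite", "velvet", "orchid", "tundra", "ember", "quartz", "lagoon"]


def random_subdomain(n_words: int = 3) -> str:
    """Pick an unrelated, hard-to-guess label such as 'orchid-ember-quartz'."""
    return "-".join(secrets.choice(WORDS) for _ in range(n_words))


def catch_all_response(min_len: int = 512, max_len: int = 4096) -> Tuple[int, bytes]:
    """Answer any unknown subdomain with 200 and a random body of random length."""
    length = min_len + secrets.randbelow(max_len - min_len + 1)
    return 200, secrets.token_bytes(length)
```

With the toy list above there are only 8³ = 512 possible labels, which is trivially brute-forced; a large word list is what makes guessing impractical.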

Tailscale is awesome. Alternatively, if you’re more technically inclined, you can build your own Tailscale-like setup with plain WireGuard, and all you need is a static IP for your home network. WireGuard will always be safer than exposing each individual service.

all you need is to get a static IP for your home network

Don’t even need a static IP. Dynamic DNS is enough.
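
Dynamic DNS just means re-pointing the record whenever the home IP changes. A sketch of the core step, assuming a hypothetical `push_update` callback standing in for your DNS provider’s update API, and that the current public IP is obtained elsewhere:

```python
import ipaddress
from typing import Callable, Optional


def sync_dns(current_ip: str, last_ip: Optional[str], push_update: Callable[[str], None]) -> str:
    """Push a DNS update only if the public IP changed; return the IP now on record."""
    ip = str(ipaddress.ip_address(current_ip))  # validates and normalises the address
    if ip != last_ip:
        push_update(ip)
    return ip
```

Run it on a timer (cron, a systemd timer, or the router’s built-in DDNS client does the same job) and feed the returned IP back in as `last_ip`.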

Unless you’re behind CGNAT and without IPv6 support.
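
A quick way to tell whether you’re behind CGNAT: compare the WAN address your router reports against 100.64.0.0/10, the shared address space reserved for carrier-grade NAT (RFC 6598). A sketch, assuming you’ve read the WAN IP from your router’s status page:

```python
import ipaddress

# RFC 6598 shared address space, used by ISPs for carrier-grade NAT.
CGNAT = ipaddress.ip_network("100.64.0.0/10")


def wan_status(wan_ip: str) -> str:
    """Classify a router's WAN address: 'cgnat', 'private', or 'public'."""
    addr = ipaddress.ip_address(wan_ip)
    if addr in CGNAT:  # always False for IPv6 addresses
        return "cgnat"
    if addr.is_private:
        return "private"  # e.g. behind another router (double NAT)
    return "public"
```

Tailscale itself also uses 100.64.0.0/10 for node addresses, so run this against the router’s WAN address, not a tailnet IP.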

cgnat

Ew

Love Tailscale. The only issue I had with it was making it play nice with my local, daily-driver VPN. Got it worked out, though. So now everything is jippity jippity.

You could put Authentik in front of it too.

I think that breaks most clients

? How does putting something before it break it? It most certainly doesn’t.

Clients are built to speak directly to the Jellyfin API. If you put an auth service in front, they won’t even prompt you to authenticate with it.

Sorry, when out of the house I only use the web UI, not clients.

Yes, it breaks native login, but you can authenticate with Authentik on your phone for example, and use Quick connect to authorize non-browser sessions with it.

Kinda hard because they have an ongoing bug where if you put it behind a reverse proxy with basic auth (typical easy button to secure X web software on Internet), it breaks jellyfin.

Best thing is not to. Put it on your local network and connect in with a VPN.

I’m not experiencing that bug. My reverse proxy is only accessed locally at the moment though. I did have to play with headers a bit in nginx to get it working.

Basic auth. The bug is if you enable basic auth.

It is enabled, but now I’m doubting that. I’ll double check when my homelab shift is complete.

It isn’t. Otherwise you wouldn’t be able to load many Jellyfin assets, because there’s a collision on the Authorization header.
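
The collision described here, sketched: HTTP Basic auth and Jellyfin’s own client auth both want the single `Authorization` request header. The `MediaBrowser Token="..."` value below is an assumed, simplified form of the scheme Jellyfin clients send (real clients add Client/Device/Version fields, and some use `X-Emby-Authorization` instead), and `build_headers` is a hypothetical helper:

```python
import base64
from typing import Dict


def basic_auth_value(user: str, password: str) -> str:
    """Value a browser sends after a reverse proxy's Basic auth prompt."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def jellyfin_auth_value(token: str) -> str:
    """Simplified, assumed form of a Jellyfin client's auth value."""
    return f'MediaBrowser Token="{token}"'


def build_headers(*auth_values: str) -> Dict[str, str]:
    """Set each value on the one Authorization header; the last writer wins."""
    headers: Dict[str, str] = {}
    for value in auth_values:
        headers["Authorization"] = value
    return headers


headers = build_headers(basic_auth_value("alice", "pw"), jellyfin_auth_value("abc123"))
# Only one scheme survives: here the proxy's Basic credentials are lost.
print(headers["Authorization"])
```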

I use fail2ban to ban IPs that fail to log in, and also IPs that perform common scans against the reverse proxy.

Also have Jellyfin disable the account after a number of failed logins.
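
What fail2ban does here, sketched: scan the log for failed logins and ban IPs past a threshold. The regex assumes a Jellyfin log line of the form `Authentication request for "user" has been denied (IP: "...")`, modelled on the pattern commonly used in Jellyfin fail2ban filters; check it against your own logs. Unlike fail2ban, this sketch ignores the time window (`findtime`) and simply counts:

```python
import re
from collections import Counter
from typing import Iterable, Set

# Assumed log format; verify against your Jellyfin logs before relying on it.
DENIED = re.compile(
    r'Authentication request for "(?P<user>[^"]*)" has been denied \(IP: "(?P<ip>[^"]+)"\)'
)


def ips_to_ban(lines: Iterable[str], max_retry: int = 5) -> Set[str]:
    """Return IPs with at least `max_retry` denied logins in `lines`."""
    failures = Counter()
    for line in lines:
        match = DENIED.search(line)
        if match:
            failures[match.group("ip")] += 1
    return {ip for ip, count in failures.items() if count >= max_retry}
```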

I have another site on a different port that sits behind basic auth and adds the IP to a short ipset whitelist.

So first I have to auth into that site with basic auth, then I load jellyfin on the other port.

I don’t understand how that isn’t widely deployed. I call it poor man’s Zero Trust.
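
The “poor man’s Zero Trust” flow, sketched: a successful Basic auth login on the side site adds the client IP to an allowlist with an expiry, and the Jellyfin port only accepts allowlisted IPs. This mirrors what an ipset with per-entry timeouts does in the kernel; the class and the one-hour TTL here are hypothetical:

```python
import time
from typing import Dict, Optional


class ExpiringAllowlist:
    """IP allowlist whose entries expire `ttl_s` seconds after being added."""

    def __init__(self, ttl_s: float = 3600.0) -> None:
        self.ttl_s = ttl_s
        self._expires_at: Dict[str, float] = {}

    def add(self, ip: str, now: Optional[float] = None) -> None:
        """Called after a successful Basic auth login on the side site."""
        now = time.monotonic() if now is None else now
        self._expires_at[ip] = now + self.ttl_s

    def allowed(self, ip: str, now: Optional[float] = None) -> bool:
        """Gate for the Jellyfin port: is `ip` listed and not yet expired?"""
        now = time.monotonic() if now is None else now
        expiry = self._expires_at.get(ip)
        if expiry is None:
            return False
        if now >= expiry:
            del self._expires_at[ip]  # lazily drop the stale entry
            return False
        return True
```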

I mean I’d rather jellyfin fix the bug and let me just put that behind basic auth

I hate the cloudflare stuff making me do captchas or outright denying me with a burning passion. My fault for committing the heinous crime of using a VPN!

Using Cloudflare Tunnels means your traffic isn’t end-to-end encrypted: Cloudflare terminates TLS and sees everything.

just run wireguard on the jelly server…

Can’t use double VPN on mobile.

they don’t have to figure it out, you are the one running it

Doesn’t streaming media over a Cloudflare tunnel/proxy violate their ToS?

Cloudflare is known for being unreliable with how and when it enforces the ToS (especially for paying customers!). Just because they haven’t cracked down on everyone doesn’t mean they won’t arbitrarily pick out your account from thousands of others just to slap a ban on. There’s inherent risk to it

No, they removed that clause some 2 or 3 years back.

🤫

I use Pangolin (https://github.com/fosrl/pangolin)

URL is 404

It’s not, though?

remove the brace at the end

Uhh, interesting! Thanks for sharing.

I was thinking of setting this up recently after seeing it on Jim’s Garage. Do you use it for all your external services or just Jellyfin? How does it compare to a fairly robust WAF like BunkerWeb?

I use it for all of my external services. It’s just WireGuard and Traefik under the hood. I have no familiarity with BunkerWeb, but Pangolin integrates with CrowdSec. Specifically, it comes with the Traefik bouncer out of the box, but it’s relatively straightforward to add the CrowdSec firewall bouncer on the host machine, which I have found adequate for my needs.

So I’ve been trying to set up this exact thing for the past few weeks; I tried all manner of Nginx/Tailscale/VPS/Traefik/WireGuard/Authelia combos, but to no avail.

I was lost in the maze

However, I realised that it was literally as simple as setting up a Cloudflare Tunnel on the particular local network I wanted exposed (in my case, the Docker network that runs the Jellyfin container) and then linking that domain/ip:port in Cloudflare’s Zero Trust dashboard.

Cloudflare then proxies all requests to your public domain/route to your locally hosted service, all without exposing your private IP, all without exposing any ports on your router, and everything is encrypted with HTTPS by default

And you can even set up what looks like pretty robust authentication (2FA, limited to only certain emails, etc) for your tunnel

Not sure what your use case is, but as mine is shared with only me and my partner, this worked like a charm

Pay attention to your email. When Cloudflare decides to warn you about this (they will; it’s very, very much against the TOS), they’ll send you an email, and if you don’t remove the tunnel ASAP, your entire account will be terminated.

Why would Cloudflare warn me against a service they themselves offer? The email authentication is all managed by them

You’re not allowed to tunnel video traffic.

I’m pretty sure that using Jellyfin over Cloudflare tunnels is against their TOS, just FYI. I’m trying to figure out an alternative myself right now because of that.

I just moved away from that. I now have Traefik on a VPS with a WireGuard server that my home router connects to (Immich IP forwarded in the WG config).

Thanks for mentioning. I ended up using a Tailscale funnel and everything is running swimmingly so far.