I’m a retired Unix admin. It was my job from the early '90s until the mid '10s. I’ve kept somewhat current ever since by running various machines at home. So far I’ve managed to avoid using Docker at home, even though I have a decent understanding of how it works: after I stopped being a sysadmin in the mid '10s, I still worked for a technology company and did plenty of “interesting” reading and training.

It seems that more and more stuff that I want to run at home is being delivered as Docker-first and I have to really go out of my way to find a non-Docker install.

I’m thinking it’s no longer a fad and I should invest some time getting comfortable with it?

No. Docker is proprietary

Podman ftw!

Concur. Podman doesn’t (have to) run as root, and it has auto-update and podman-compose to use Docker Compose files. Containers are cool, Docker less so.

My biggest issue with Podman is that podman-compose isn’t officially recommended or supported, and the alternatives (Kubernetes YAML and Quadlet) kind of suck compared to using a compose file. It makes me wary of pouring a bunch of work into switching to podman-compose. I have no clue why they didn’t just use the Compose spec for their official orchestration method.
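For comparison, here is roughly what the Kubernetes-YAML route looks like. This is a minimal sketch, assuming Podman 4.x, where such a file is run with `podman kube play`; the pod name, image and ports are placeholders chosen for illustration, not anyone's actual setup.

```yaml
# whoami-pod.yaml: run with `podman kube play whoami-pod.yaml`
# (pod name, image and ports are placeholders for illustration)
apiVersion: v1
kind: Pod
metadata:
  name: whoami-pod
spec:
  containers:
    - name: whoami
      image: docker.io/traefik/whoami:latest
      ports:
        - containerPort: 80   # port the app listens on inside the pod
          hostPort: 8080      # port published on the host
```

Compared with a compose file it is more verbose for a single container, which is a fair part of the complaint above.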

Once the containers are running under podman-compose, you can use `podman generate systemd` to create systemd services. That helped me move a rather large compose file to a bunch of services. My notes weren’t the best, sorry, but that’s the gist. It got me moved. I’ve now moved on to .container files for new stuff, which generate the services on the fly. I need to move my old services over, but they work and who’s got the time…

How do you like the .container files? I hate the idea of having different files for each container, and each volume. They also don’t even support pods and the syntax is just terrible compared to YAML.

Not sure yet. I agree it’s not as nice to look at as YAML, but at least it’s prettier than the alternative systemd.service implementation, and it’s been rock solid so far. Time will tell; I’m sure pods will come, and it seems to be what Red Hat sees as their direction. A method for automatically generating them from Docker YAML (and hopefully vice versa) would go a looong way towards speeding adoption.

How do you feel about having to specify a different file for all of your containers and volumes? Has that annoyed you at all? I agree that pods are really nice, and they should give you a way to generate them from compose YAML.

I’ve regrettably only heard of Podman in passing. At work we use docker containers with kubernetes, is this something we could easily transition to without friction?

Podman uses the OCI standard: it is a drop-in replacement for Docker.

I think it’s a good tool to have on your toolbelt, so it can’t hurt to look into it.

Whether you will like it or not, and whether you should move your existing stuff to it is another matter. I know us old Unix folk can be a fussy bunch about new fads (I started as a Unix admin in the late 90s myself).

Personally, I find docker a useful tool for a lot of things, but I also know when to leave the tool in the box.

Yes! Well, kinda. You can skip Docker and go straight to Podman, which is an open source and more integrated solution. I configure my containers as systemd services (as quadlets).

Hold up, does Podman replace Docker entirely?

I’m no expert, but as far as I can tell yes. It also seems a bit easier to have a rootless setup.

There are still edge cases, but things have improved rapidly the last year or two, to the point that most docker-compose.yaml files can be run unmodified with podman-compose.

I have, however, moved away from compose in favor of running containers and pods as systemd services, which I really like. If you want to try it, make sure your distro has a reasonably new version of Podman, v4.4 or newer. Debian stable has an older version, so I had to use the testing repos to get quadlets working.

It depends on what you do with Docker. Podman can replace many of the core Docker features, but it does not ship with a Docker Desktop app (there may be one available). Also, last I checked, there were differences in the `docker build` command.

That being said, I’m using Podman at home and at work, doing development things and building images just fine. My final images are built in a pipeline with actual Docker, though.

I jumped ship from Docker (like the metaphor?) when they started clamping down on unregistered users and changed the corporate license. It’s my personal middle finger to them.

Podman desktop! https://podman-desktop.io/

I, too, need to know…

Also consider Nix/NixOS, I have used Docker, Kubernetes, LXC and prefer Nix the most. Especially for home use not requiring any scaling.

Similar story to yours. I was an HP-UX and BSD admin; at some point in the '00s, I stopped self-hosting. It felt too much like the work I was paid to do in the office.

But then I decided to give it a go in the mid-10s, mainly because I was uneasy about my dependence on cloud services.

The biggest advantage of Docker for me is the easy spin-up/tear-down capability. I can rapidly prototype new services without worrying about all the cruft left behind by badly written software packages on the host machine.

I hate it very much. I am sure it is due to my limited understanding of it, but I’ve been stuck on some things that were very easy for me using VMs.

We have two networks, one of which has very limited internet connectivity, behind a proxy. When using VMs, I used to configure everything (code, files, settings) on a machine with no restrictions, shut it down, move the VM files to the restricted network, boot, and be happily on my way.

I’m unable to make this work with Docker. Getting my Ubuntu server to fetch its updates behind a proxy is easy enough; setting it up for Python pip is another level; realising the specific Python libraries need special keys to work around proxies is yet another; figuring out how to get it done for Docker and Python under it is when I gave up. Why can it not be as simple as the VM?

Maybe I’m not searching with the right terms, or maybe I should go and learn Docker “properly”, but there is no doubt that using Docker is much more difficult for my use case than using VMs.

You are right. Either you are using docker very wrong, or docker is not meant for this use case.

You say “getting Ubuntu server to fetch its updates behind a proxy”. You shouldn’t be updating Ubuntu from inside Docker. Instead, the system should be somewhat immutable. You should configure the version you want to use as part of the Dockerfile.

Same with python and keys. You likely want to install python dependencies in the Dockerfile, so that you can install them once and it becomes bundled into the container image. Then you don’t need to use pip behind the proxy.

I’m not much into new year resolutions, but I think I’ll make a conscious effort to learn Docker in the coming months. Any suggestions for good guides for someone coming from VM end will be appreciated.

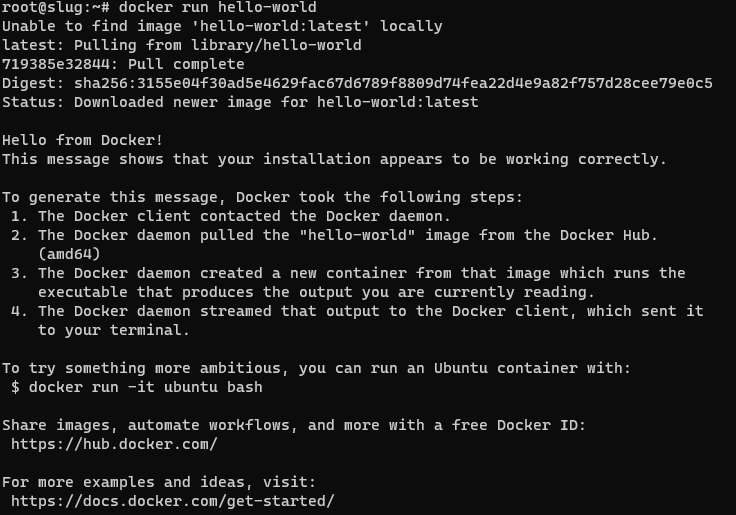

I learn by twiddling knobs and such and inspecting results, so having come from a tall stack of KVM machines to docker, this helped me become effective with a project I needed to get running quickly: https://training.play-with-docker.com/

You basically log into a remote system which has Docker configured and go through their guide to see what command is needed per action; it also indirectly gets you to grok the conceptual difference between a VM and a container.

Jump straight to the first tutorial: https://training.play-with-docker.com/ops-s1-hello/

Thank you for these links, they look just right. Most tutorials I come across these days are videos. Maybe they are easier to make. These tutorials that allow you to tinker at your own pace seem better to me. Would you mind if I reach out to you over DM if I get stuck on something while learning and can’t find the right answer easily?

Sure, that’s ok. Thanks for asking.

Networking seems to be where the documentation for both Docker and Podman is a bit slim, just a heads up.

Huh? Your docker container shouldn’t be calling pip for updates at runtime, you should consider a container immutable and ephemeral. Stop thinking about it as a mini VM. Build your container (presumably pip-ing in all the libraries you require) on the machine with full network access, then export or publish the container image and run it on the machine with limited access. If you want updates, you regularly rebuild the container image and repeat.

Alternatively, even at build time it’s fairly easy to use a proxy with docker, unless you have some weird proxy configuration. I use it here so that updates get pulled from a local caching proxy, reducing my internet traffic and making rebuilds quicker.
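A minimal sketch of the build-time proxy approach with Compose, assuming a Dockerfile in the same directory whose `RUN` steps do the `pip install`. The service name, image tag and proxy URL are placeholders. `HTTP_PROXY`, `HTTPS_PROXY`, `NO_PROXY` and their lowercase forms are predefined Docker build arguments, so the Dockerfile doesn't need an `ARG` line for `RUN` steps to see them, and pip reads them from the environment.

```yaml
# docker-compose.yml (service name, tag and proxy URL are placeholders)
services:
  myapp:
    image: myapp:1.0          # name the built image so it can be exported later
    build:
      context: .              # directory containing the Dockerfile
      args:
        # Predefined Docker build args: visible to RUN steps without an ARG line.
        HTTP_PROXY: http://proxy.example.internal:3128
        HTTPS_PROXY: http://proxy.example.internal:3128
        http_proxy: http://proxy.example.internal:3128
        https_proxy: http://proxy.example.internal:3128
        NO_PROXY: localhost,127.0.0.1
```

Running `docker compose build` then bakes the dependencies into `myapp:1.0`; by default Docker also leaves these predefined proxy args out of `docker history` output.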

I think I’m not aware of the exporting/publishing part and that’s the cause of my woes. I get everything running on the machine with unrestricted access, move to the machine with restricted access go “docker compose up” and get stuck. I’ll read up on exporting/publishing, thank you.
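For the restricted machine, a sketch assuming the image was built and tagged `myapp:1.0` on the connected machine (a placeholder name). It can be exported there with `docker save -o myapp.tar myapp:1.0`, copied over the same way the VM files used to be, and imported with `docker load -i myapp.tar`. The compose file on the restricted side then has no `build:` section at all, and `pull_policy: never` stops Compose from trying to reach a registry.

```yaml
# docker-compose.yml on the restricted machine
# (assumes myapp:1.0 was imported with `docker load -i myapp.tar`)
services:
  myapp:
    image: myapp:1.0
    pull_policy: never        # use the locally loaded image, never contact a registry
    restart: unless-stopped
    volumes:
      - ./data:/data          # placeholder path for state that should survive
```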

As a casual self-hoster for twenty years, I ran into a consistent pattern: I would install things to try them out and they’d work great at first; but after installing/uninstalling other services, updating libraries, etc, the conflicts would accumulate until I’d eventually give up and re-install the whole system from scratch. And by then I’d have lost track of how I installed things the first time, and have to reconfigure everything by trial and error.

Docker has eliminated that cycle—and once you learn the basics of Docker, most software is easier to install as a container than it is on a bare system. And Docker makes it more consistent to keep track of which ports, local directories, and other local resources each service is using, and of what steps are needed to install or reinstall.

I use it for Gitea, Nextcloud, Redis, Postgres, and a few REST servers and love it! Super easy.

It can suck for things like a homelab Stable Diffusion and things that require a GPU or other hardware.

As someone who does AI for a living: GPU+docker is easy and reliable. Especially if you compare it to VMs.
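For reference, a sketch of how a GPU is typically requested in a compose file, assuming an NVIDIA card with the NVIDIA Container Toolkit installed on the host; the service and image names are placeholders.

```yaml
services:
  sd:
    image: example/stable-diffusion-webui:latest   # hypothetical image name
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all          # or a number, e.g. 1
              capabilities: [gpu]
```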

Good to hear. Maybe I should try again.

postgres

I never use it for databases. I find I don’t gain much from containerizing them, because the interesting and difficult bits of customizing and tailoring a database to your needs are in the data file system or in kernel parameters, not in the database binaries themselves. On most distributions it’s trivial to install the binaries for postgres/mariadb or whatnot.

Databases are usually fairly resource intensive too, so you’d want a separate VM for it anyway.

Very good points.

In my case I just need it for a couple of users with maybe a few dozen transactions a day; it’s far from being a bottleneck and there’s little point in optimizing it further.

Containerizing it also has the benefit of boiling all installation and configuration down into one very convenient docker-compose file… Actually two: I use one with all the config stuff that’s published to Gitea and one that has the sensitive data.
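One alternative to a second, private compose file is Compose's `secrets` support. A minimal sketch, assuming the official `postgres` image (which can read its password from the file named in `POSTGRES_PASSWORD_FILE`); the user, database name and paths are placeholders. Only `db_password.txt` has to stay out of git.

```yaml
services:
  db:
    image: postgres:16
    environment:
      POSTGRES_USER: app                               # placeholder
      POSTGRES_DB: app                                 # placeholder
      POSTGRES_PASSWORD_FILE: /run/secrets/db_password # read by the postgres image
    secrets:
      - db_password
    volumes:
      - ./pgdata:/var/lib/postgresql/data
    restart: unless-stopped

secrets:
  db_password:
    file: ./db_password.txt   # keep this file out of git
```

Compose mounts the file inside the container at `/run/secrets/db_password`, so the password itself never appears in the compose file.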

You should try it.

I would never go back installing something without docker. Never.

For a lot of smaller things I feel that Docker is overkill, or simply not feasible (package management, utilities like screenfetch, text editors, etc…) but for larger apps it definitely makes it easier once you wrap your head around containerization.

For example, I switched full-time to Jellyfin from Plex and was attempting to use caddy-docker-proxy to forward the host network that Jellyfin uses to the Caddy server, but I couldn’t get it to work automatically (explicitly defining the reverse proxy in the Caddyfile works without issue). I thought it would be easier to just install it natively, but since I hadn’t installed it that way in a few years I forgot that it pulls in like 30-40 dependencies since it’s written in .Net (or would that be C#?) and took a good few minutes to install. I said screw that and removed all the deps and went back to using the container and just stuck with the normal version of Caddy which works fine.
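For anyone trying the same thing, a minimal sketch of Jellyfin on host networking (not necessarily the setup described above). With `network_mode: host` the container shares the host's network stack, so there is no `ports:` mapping, and a reverse proxy reaches it at the host's address on port 8096, Jellyfin's default HTTP port. The media path is a placeholder.

```yaml
services:
  jellyfin:
    image: jellyfin/jellyfin:latest
    container_name: jellyfin
    network_mode: host            # binds directly to the host's interfaces (web UI on 8096)
    volumes:
      - ./config:/config
      - ./cache:/cache
      - /path/to/media:/media:ro  # placeholder media path, read-only
    restart: unless-stopped
```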

Dude, I’m kinda you. I just jumped into Docker over the summer… feel stupid not doing it sooner. There is just so much pre-created content, tutorials, you name it. It’s very mature.

I spent a weekend containerizing all my home services… totally worth it, and easy as pi[hole] in a container!

Well, that wasn’t a huge investment :-) I’m in…

I understand I’ve got LOTS to learn. I think I’ll start by installing something new that I’m looking at with docker and get comfortable with something my users (family…) are not yet relying on.

If you are interested in a web interface for management check out portainer.

Forget `docker run`; `docker compose up -d` is the command you need on a server. Get familiar with a UI, it makes your life much easier at the beginning: Portainer or Yacht in the browser, lazydocker in the terminal.

I would suggest docker compose before a UI to someone who likes to work via the command line.

Many popular docker repositories also automatically give docker run equivalents in compose format, so the learning curve is not as steep vs what it was before for learning docker or docker compose commands.

There is even a tool to convert Docker Run commands to a Docker Compose file :)

Such as this one hosted by Opnxng:

https://it.opnxng.com/docker-run-to-docker-compose-converter

`# docker compose up -d`

`no configuration file provided: not found`

Like `docker run` by itself, it’s not the full command; you need a compose file: https://docs.docker.com/engine/reference/commandline/compose/

Basically it’s the same as `docker run`, but all the configuration is read from a file instead of the command line, which makes it more easily reproducible; you just have to store those files. The important thing is that compose is very useful for self-hosting, where your containers are expected to run all the time.
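To make the `docker run` comparison concrete, here is a small example: the `docker run` command in the comment and a compose file expressing the same thing. `traefik/whoami` is just a tiny test web server picked for illustration. Saved as `docker-compose.yml`, it starts with `docker compose up -d` from that directory; the `-d` moves from `docker run` to `up`.

```yaml
# Equivalent of:
#   docker run -d --name whoami -p 8000:80 --restart unless-stopped traefik/whoami
services:
  whoami:
    image: traefik/whoami
    container_name: whoami     # --name
    ports:
      - "8000:80"              # -p HOST:CONTAINER
    restart: unless-stopped    # --restart
```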

Yeah, I get it now. Just the way I read it the first time it sounded like you were saying that was a complete command and it was going to do something “magic” for me :-)

You need to create a docker-compose.yml file. I tend to put everything in one dir per container, so I just have to move the dir somewhere else if I want to move that container to a different machine. Here’s an example I use for Picard, with examples of NFS mounts and local bind mounts with paths relative to the directory the docker-compose.yml is in. You basically just put this in a directory, create the local bind mount dirs in that same directory, adjust YOURPASS and the mounts/NFS shares, and it will keep working everywhere you move the directory, as long as the machine has Docker and the image is available for its architecture.

```yaml
version: '3'
services:
  picard:
    image: mikenye/picard:latest
    container_name: picard
    environment:
      KEEP_APP_RUNNING: 1
      VNC_PASSWORD: YOURPASS
      GROUP_ID: 100
      USER_ID: 1000
      TZ: "UTC"
    ports:
      - "5810:5800"
    volumes:
      - ./picard:/config:rw
      - dlbooks:/downloads:rw
      - cleanedaudiobooks:/cleaned:rw
    restart: always

volumes:
  dlbooks:
    driver_opts:
      type: "nfs"
      o: "addr=NFSSERVERIP,nolock,soft"
      device: ":NFSPATH"
  cleanedaudiobooks:
    driver_opts:
      type: "nfs"
      o: "addr=NFSSERVERIP,nolock,soft"
      device: ":OTHER NFSPATH"
```

dockge is amazing for people that see the value in a gui but want it to stay the hell out of the way. https://github.com/louislam/dockge lets you use compose without trapping your stuff in stacks like portainer does. You decide you don’t like dockge, you just go back to cli and do your docker compose up -d --force-recreate .

Second this. Portainer + docker compose is so good that now I go out of my way to composerize everything so I don’t have to run docker containers from the cli.

As a guy who’s where you were before the summer:

Can you explain why you think it is better now after you have ‘contained’ all your services? What advantages are there, that I can’t seem to figure out?

Please teach me Mr. OriginalLucifer from the land of MoistCatSweat.Com

You can also back up your compose file and data directories, pull the backup from another computer, and as long as the architecture is compatible you can just restore it with no problem. So basically, your services are a whole lot more portable. I recently did this when dedipath went under. Pulled my latest backup to a new server at virmach, and I was up and running as soon as the DNS propagated.

No more dependency hell from one package needing `libsomething.so` 5.3.1 while another service absolutely can only run with `libsomething.so` 4.2.0. That, and knowing that when I remove a container, it’s not leaving a bunch of cruft behind.

Modularity, compartmentalization, reliability, predictability.

One piece of software needs MySQL 5, another needs MariaDB 7. A third service needs PHP 7 while the distro-supported version is 8. A fourth service uses CUDA 11.7, not 11.8, which is what everything in your package manager uses. A fifth service’s install was only tested on the latest Ubuntu, and now you need to figure out which RPM gives the exact library it expects. A sixth service expects ODBC to be set up in a very specific way, but handwaves it in the installation docs. A seventh program expects a symlink at a specific place that exists on the desktop version of the distro, but not the server version. And then you’ve got that weird program that insists on admin access to the database so it can create its own user. Since I don’t trust it with that, let it just have its own database server running in Docker, and good riddance.

And so on and so forth… with docker not only is all this specified in excruciating details, it’s also the exact same setup on every install.
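As a small illustration of the version-conflict point, a sketch with two database versions that would fight each other as host packages, running side by side. The service names, tags and passwords are placeholders. Neither publishes a port, so each is reachable only by other containers on the same compose network, by service name on its usual port 3306.

```yaml
services:
  legacy-db:
    image: mysql:5.7              # old app that insists on MySQL 5
    environment:
      MYSQL_ROOT_PASSWORD: change-me
    volumes:
      - ./legacy-db:/var/lib/mysql
  current-db:
    image: mariadb:11             # newer app on a current MariaDB
    environment:
      MARIADB_ROOT_PASSWORD: change-me
    volumes:
      - ./current-db:/var/lib/mysql
```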

You don’t have it not working on arch because the maintainer of a library there decided to inline a patch that supposedly doesn’t change anything, but somehow causes the program to segfault.

I can develop a service on windows, test it, deploy it to my Kubernetes cluster, and I don’t even have to worry about which machine to deploy it on, it just runs it on a machine. Probably an Ubuntu machine, but maybe on that Gentoo node instead. And if my osx friend wants to try it out, then no problem. I can just give him a command, and it’s running on his laptop. No worries about the right runtime or setting up environment or libraries and all that.

If you’re an old Linux admin… This is what utopia looks like.

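Edit: And restarting a container is almost like reinstalling the OS and the program. Since the image is static, a fresh container removes all file system cruft too and starts up a pristine new copy (except, of course, the specific files and folders you have chosen to save between restarts). With plain Docker that means recreating the container (for example with `docker compose up -d --force-recreate`), since `docker restart` keeps the container’s writable layer.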

It sounds very nice and clean to work with!

If I’m lucky enough to get a Raspberry Pi 5 for Christmas, I will try to set it up with Docker for all my services!

Thanks for the explanation.

Just remember that the Raspberry Pi has an ARM CPU, which is a different architecture. Docker can build images for several architectures automatically (multi-platform builds). By default the non-native builds run under an ARM emulator, which takes more time and space; cross-compiling in the Dockerfile avoids most of that.

https://www.docker.com/blog/faster-multi-platform-builds-dockerfile-cross-compilation-guide/ has some info about it.
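In compose form, a multi-architecture build can be sketched as below, assuming Buildx is set up with a builder that can produce multi-platform images (for example with QEMU emulation registered) and a registry to push the result to, since such images typically can't be loaded into Docker's classic local image store. The registry and image name are placeholders.

```yaml
services:
  myapp:
    image: registry.example.com/me/myapp:1.0   # placeholder registry/name
    build:
      context: .
      platforms:            # build one image variant per architecture
        - linux/amd64
        - linux/arm64       # e.g. a Raspberry Pi 5 on a 64-bit OS
```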

Yes, you should. I would look into docker compose as it makes deployments very easy.

It’s quite easy to use once you get the hang of it. In most situations it’s the preferred option, because you can choose where the relevant files live, allowing you to properly isolate your applications. On single-purpose servers, it makes deployment of applications and maintaining dependencies significantly easier.

At the very least, it’s a great tool to add to your toolbox to use as needed.

Yes. Let me give you an example of why it is very nice: I migrated one of my machines at home from an old x86-64 laptop to an arm64 ODROID this week. I had a couple of applications running, 8 or 9 of them, all organized in a docker compose file with all persistent storage volumes mapped to plain folders in a directory. All I had to do was stop the compose setup, copy the folder structure, install Docker on the new machine and start the compose setup. There was one minor hiccup, since I forgot that one of the containers was built locally, but since all the other software has arm64 images available under the same name, it just worked. Changed the host IP and done.

One of the very nice things is the portability of containers, as well as the reproducibility (within limits) of the applications, since you divide them into stateless parts (the container) and stateful parts (the volumes), definitely give it a go!

Why wouldn’t you want to use containers? I’m curious. What do you use now? Ansible? Puppet? Chef?

Not OP, but, seriously asking, why should I? I usually still use VMs for every app I need. Much more work, I assume, but besides saving time (and some overhead and maybe performance), what would I gain from Docker or other containers?

VMs have a ton of overhead compared to Docker. VMs emulate a whole computer and run their own kernel, while Docker containers share the host’s kernel and just sandbox the apps.

In theory, VMs are far more secure since they’re almost entirely isolated from the host system (assuming you don’t have any of the host’s filesystems attached), they are also OS agnostic whereas Docker is limited to the OS it runs on.

Ah ok thanks, the security-aspect is indeed important to me. So I shouldn’t really use it for critical things. Especially those with external access.

Docker is still secure, it’s just less secure than Virtualization. It’s like a standard door knob lock (the twist/push button kind) vs a deadbolt. Both will keep 90% of bad-actors out but those who really want to get in can based on how high the security is.

what would I gain from docker or other containers?

Reproducibility.

Once you’ve built the Dockerfile or compose file for your container, it’s trivial to spin it up on another machine later. It’s no longer bound to the specific VM and OS configuration you’ve built your service on top of and you can easily migrate containers or move them around.

But that’s possible with a vm too. Or am I missing something here?

Apart from the dependency stuff, what you need to migrate when you use docker-compose is just a text file and the volumes that hold the data. No full VMs that contain entire systems because all that stuff is just recreated automatically in seconds on the new machine.

Ok, that does save a lot of overhead and space. Does it impact performance compared to a vm?

If anything, containers are less resource intensive than VMs.

Thank you. Guess i really need to take some time to get into it. Just never saw a real reason.

If you update your OS, it could happen that a changed dependency breaks your app. This wouldn’t happen with docker, as every dependency is shipped with the application in the container.

Ah okay. So it’s like an escape from dependency hell… Thanks.

It saves time, minimizes compatibility issues, gives you portability, and you can update with two commands. There’s really no reason not to use Docker.

But I can’t really tinker IN the Docker image, right? It’s maintained elsewhere and I just get what I get. But with way less tinkering? Do I have control over the amount/percentage of resources a container uses? And could I just freeze a container, move it to another physical server, and continue it there? So would it be worth the time to learn everything about Docker to replace my “just” 10 VMs in the long run?

You can tinker in the image in a variety of ways, but make sure to preserve your state outside the container in some way:

- Extend the image you want to use with a custom Dockerfile

- Execute an interactive shell session, for example `docker exec -it containerName /bin/bash`

- Replace or expose filesystem resources using host or volume mounts.

Yes, you can set a variety of resources constraints, including but not limited to processor and memory utilization.
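In compose form, those limits could look like this. It's a sketch where the image and the numbers are arbitrary placeholders; current `docker compose` honours these `deploy.resources` settings on a plain (non-Swarm) Docker host.

```yaml
services:
  app:
    image: nginx:alpine       # placeholder image
    deploy:
      resources:
        limits:
          cpus: "1.5"         # at most 1.5 CPUs' worth of time
          memory: 512M        # hard memory cap
        reservations:
          memory: 128M        # soft reservation
```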

There’s no reason to “freeze” a container, but if your state is in a host or volume mount, destroy the container, migrate your data, and resume it with a run command or docker-compose file. Different terminology and concept, but same result.

It may be worth it if you want to free up overhead used by virtual machines on your host, store your state more centrally, and/or represent your infrastructure as a docker-compose file or set of docker-compose files.

Hm. That doesn’t really sound bad. Thanks man, I guess I will take some time to read into it. Currently on proxmox, but AFAIK it does containers too.

It’s really not! I migrated rapidly from orchestrating services with Vagrant and virtual machines to Docker just because of how much more efficient it is.

Granted, it’s a different tool to learn and takes time, but I feel like the tradeoff was well worth it in my case.

I also further orchestrate my containers using Ansible, but that’s not entirely necessary for everyone.

I only use like 10 VMs, so I guess there’s no need for overkill with additional stuff. Though I’d like a GUI; there probably is one for Docker? I once tested a complete OS built around Docker (forgot the name), but it seemed very unfriendly and overly convoluted.

One of the things I like about containers is how central the IaC methodology is. There are certainly tools to codify VMs, but with Docker, right out of the gate, you’ll be defining your containers through a Dockerfile, or docker-compose.yml, or whatever other orchestration platform. With a VM, I’m always tempted to just make on the fly config changes directly on the box, since it’s so heavy to rebuild them, but with containers, I’m more driven to properly update the container definition and then rebuild the container. Because of that, you have an inherent backup that you can easily push to a remote git server or something similar. Maybe that’s not as much of a benefit if you have a good system already, but containers make it easier imo.

Actually, I’ve only tried a Docker container once, tbh. Haven’t put much time into it and was kinda forced to. So, if I got you right, I define the container with something like NIC setup or IP or RAM/CPU usage and that’s it? And the configuration of the app in the container: is that IN the container or applied “onto it” for easy rebuild purposes? Right now I just have a ton of (big) backups of all VMs. If I screw up, I go back to this morning. Takes like 2 minutes tops. Would I even see a benefit from Docker, besides saving a lot of overhead, of course?

You don’t actually have to care about defining IP, cpu/ram reservations, etc. Your docker-compose file just defines the applications you want and a port mapping or two, and that’s it.

Example:

```yaml
---
version: "2.1"
services:
  adguardhome-sync:
    image: lscr.io/linuxserver/adguardhome-sync:latest
    container_name: adguardhome-sync
    environment:
      - CONFIGFILE=/config/adguardhome-sync.yaml
    volumes:
      - /path/to/my/configs/adguardhome-sync:/config
    ports:
      - 8080:8080
    restart: unless-stopped
```

That’s it. You run `docker-compose up` and the container starts, reads your config from your config folder, and exposes port 8080 to the rest of your network.

Oh… But that means I need another server with a reverse proxy to actually reach it by domain/IP? Luckily Caddy already runs fine 😊

Thanks man!

Most people set up a reverse proxy, yes, but it’s not strictly necessary. You could certainly change the port mapping to

`443:8080` (host port 443 to the container’s port 8080) and expose the application port directly that way, but then you’d obviously have to jump through some extra hoops for certificates, etc.

Caddy is a great solution (and there’s even a container image for it 😉)
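Since certificates came up: a minimal sketch of the official `caddy` image under compose, with the Caddyfile and Caddy's `/data` directory (where it keeps certificates and keys) bind-mounted from the host, so they survive recreating the container and other things on the host can still read them. The local paths are placeholders.

```yaml
services:
  caddy:
    image: caddy:2
    restart: unless-stopped
    ports:
      - "80:80"
      - "443:443"
      - "443:443/udp"          # HTTP/3
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile:ro
      - ./caddy_data:/data     # certificates and keys live here
      - ./caddy_config:/config
```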

Lol… nah, I somehow prefer Caddy non-containerized, at least. Many domains and ports; I think that would not work great in a container with the certificates (which I also need to copy manually to some apps regularly). But what do I know 😁

Currently no virtualisation at all - just my OS on bare metal with some apps installed. Remember, this is a single machine sitting in my basement running Samba and a couple of other things - there’s not much to orchestrate :-)

Oh, I thought you had multiple machines.

I use docker because each service I use requires different libraries with different versions. With containers, that doesn’t matter. It also provides some rudimentary security. If an attacker gets in, they’ll have to break out of the container first to get at the rest of the system. Each container can run with a different user, so even if they do get out of the container, at worst they’ll be able to destroy the data they have access to - well, they’ll still see other stuff in the network, but I think it’s better than being straight pwned.
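A sketch of that kind of per-container hardening with standard compose options. The image name, UID:GID and paths are placeholders, the data directory has to be owned by that UID, and `read_only` only works for apps that don't write outside their volumes and `/tmp`.

```yaml
services:
  someapp:
    image: example/someapp:latest     # hypothetical image
    user: "1001:1001"                 # run as an unprivileged UID:GID
    read_only: true                   # container root filesystem is read-only
    tmpfs:
      - /tmp                          # scratch space the app may need
    cap_drop:
      - ALL                           # drop all Linux capabilities
    security_opt:
      - no-new-privileges:true        # block privilege escalation via setuid binaries
    volumes:
      - ./someapp-data:/data          # the only persistent, writable state
```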

It makes deployments a lot easier once you have the groundwork laid (writing your compose files). If you ever need to nuke the OS, reinstalling and configuring 20+ apps can take only a few minutes (assuming you still have the config data, which should live outside the container).

For example, setting up my media server, web server, SQL server, and Usenet suite of apps can take a few hours to do natively. Using Docker Compose it takes one command and about 5-10 minutes. Granted, I had to spend a few hours writing the compose files and testing everything, along with storing the config data, but simply backing up the compose files with git means I can pull everything down quickly. Even if I don’t have the config files anymore, it probably only takes an hour or less to configure everything.
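One way to keep that down to a single command while each app keeps its own directory, sketched under the assumption of Docker Compose 2.20 or newer, which added the top-level `include` element. The directory names are placeholders.

```yaml
# compose.yaml at the top of the tree; `docker compose up -d` here starts everything
include:
  - ./mediaserver/compose.yaml
  - ./webserver/compose.yaml
  - ./sql/compose.yaml
  - ./usenet/compose.yaml
```

Each included file resolves its relative paths from its own directory, so the per-app folders can still be moved around individually.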